by Jan Brosowski, Ulrich Conrad, Hans-Juergen Denecke, Victor Galis, Gia-Khanh Nguyen, Pierre Reynes, and Xirui Yang

If you are deploying SAP systems on Oracle Solaris, this article provides storage configuration and optimization recommendations for Oracle Database and SAP file systems that are NFS-mounted from an Oracle ZFS Storage Appliance system.

Introduction

This article explains optimizations, recommendations, and best practices gathered from testing done in engineering environments and customer implementations, and from related installation and configuration documentation. Sources include engineering deployments of Oracle Optimized Solution for SAP, Oracle Solution Center customer projects, the SAP product installation guide, and the SAP Community Network document "Recommended mount options for read-write directories," which is also available as My Oracle Support document 1567137.1. (Access to My Oracle Support documents requires logon and authentication. Please see the references at the end of this article for links to these documents, other sources, and useful reading.)

To develop this article, Oracle engineers implemented an example Oracle Optimized Solution for SAP configuration to support typical enterprise resource planning (ERP) and SAP Enterprise Portal workloads, applying identified best practices and recommendations. This testing helped engineers validate the solution architecture and optimize the storage configuration.

Oracle Optimized Solutions provide tested and proven best practices for how to run software products on Oracle systems.


About Oracle ZFS Storage Appliance

The Oracle ZFS Storage Appliance family of products comprises hybrid storage systems powered by a multithreaded symmetric multiprocessing (SMP) operating system. These systems are based on an innovative cache-centric architecture that features massive dynamic random access memory (DRAM) and flash-based caches. This design supplies unprecedented caching performance for both reads and writes, eliminating the need for large numbers of hard disk drive spindles that are otherwise necessary for fast I/O operations in traditional network-attached storage (NAS) solutions. In addition, the appliances' design is flexible, scaling throughput, processor performance, and storage capacity easily as storage needs change.

Oracle ZFS Storage Appliance systems offer several physical connectivity options and support multiple storage protocols. They can be connected to heterogeneous networks such as Ethernet, InfiniBand, or Fibre Channel. Supported storage protocols include NFS (Network File System), SMB (Server Message Block), HTTP (Hypertext Transfer Protocol), TFTP/SFTP/FTP (Trivial File, SSH File, and File Transfer Protocols), iSCSI (Internet Small Computer System Interface) LUNs, NDMP (Network Data Management Protocol), SRP (SCSI RDMA Protocol), and native Fibre Channel LUNs.

Oracle Optimized Solution for SAP incorporates an Oracle ZFS Storage Appliance system because it offers flexible, easy-to-manage, and highly available shared storage for both SAP and Oracle Database file systems, while at the same time delivering high performance for many Oracle Database and SAP instances.

Oracle ZFS Storage Appliance systems offer cloud-converged storage, optimized for Oracle software. These systems can act as a native cloud gateway, seamlessly moving data from on-premises environments to Oracle Public Cloud. During testing for this Oracle Optimized Solution, an Oracle ZFS Storage ZS3-4 appliance was used to provide NFS shares for data access and iSCSI LUNs for Oracle Solaris Cluster quorum devices. The storage appliance was configured for on-premises storage only.

For more information about Oracle ZFS Storage Appliance features and architecture, see the white paper "Architectural Overview of the Oracle ZFS Storage Appliance."

File System Location and Sharing

In an SAP deployment, not all files can or should be stored on a shared file system, and when they are, not all files should be stored in the same way. This section describes directories that must be on a local file system, those that can be stored on shared file systems, and those that must be stored on shared file systems. For file systems that are shared, there are different ways in which they can be shared: across multiple SAP systems, dedicated within an SAP system ID (SID), or dedicated to a specific Oracle Solaris Zone or zone cluster. Tables 1, 2, and 3 list typical file systems in an SAP and Oracle Database deployment on an Oracle ZFS Storage Appliance system, along with file sharing requirements and recommendations.

Note: According to SAP Note 527843 for Oracle Real Application Clusters (Oracle RAC), the Oracle Grid Infrastructure feature of Oracle Database must be installed locally on each cluster node. (Access to SAP Notes requires logon and authentication to the SAP Marketplace.) The default local folder name for Oracle Grid Infrastructure in an SAP environment is /oracle/GRID. It is also recommended to put /oracle/oraInventory on the local file system. In the example test deployment of Oracle Optimized Solution for SAP, we put the directory /oracle on a local file system while creating separate shared file systems on an Oracle ZFS Storage Appliance system for each subfolder of /oracle/<SID>/*, along with a shared file system for /oracle/client.

Table Legend

√ Required/Recommended

ο Possible

Table 1. Sharing Requirements for SAP File Systems

| File System | Local | Common Share Across Multiple SAP Systems | Dedicated Shared Within Each SAP System | Dedicated Shared Within an Oracle Solaris Zone Cluster (high availability [HA]) or Oracle Solaris Zone (non-HA) |

| /usr/sap/hostctrl | √ | | | |

| /sapmnt/<SID> | | | √ | |

| /usr/sap | | ο | ο | √ |

| /usr/sap/trans | | √ | | |

Table 2. Sharing Requirements for Oracle RAC File Systems

| File System | Local | Dedicated Shared Within Each SAP System | Dedicated Shared Within an Oracle Solaris Zone Cluster |

| /var/opt/oracle | √ | | |

| /usr/local/bin | √ | | |

| /oracle | √ | | ο |

| /oracle/GRID | √ | | |

| /oracle/oraInventory | √ | | ο |

| /oracle/BASE | √ | | ο |

| /oracle/stage | ο | | √ |

| /oracle/client | | √ | |

| /oracle/<SID> | √ | | ο |

| /oracle/<SID>/<release> | | | √ |

| Voting Disks | | | √ |

| Oracle Cluster Registry | | | √ |

| /oracle/<SID>/* (except folders listed above) | | | √ |

Table 3. Sharing Requirements for Failover of Single-Instance Oracle Database File Systems

| File System | Local | Dedicated Shared Within Each SAP System | Dedicated Shared Within an Oracle Solaris Zone Cluster |

| /var/opt/oracle | √ | | |

| /usr/local/bin | √ | | |

| /oracle | ο | | √ |

| /oracle/oraInventory | ο | | √ |

| /oracle/stage | ο | | √ |

| /oracle/client | | √ | |

| /oracle/<SID> | ο | | √ |

| /oracle/<SID>/<release> | | | √ |

| /oracle/<SID>/* (except folders listed above) | | | √ |

Projects and File System Layout on Oracle ZFS Storage Appliance

This section describes appliance projects and file systems, along with their respective configuration options, indicating how they were created in the example deployment of Oracle Optimized Solution for SAP. Several factors—including workload, data consistency, security, and storage capacity requirements—determine the layout of projects and file systems on the appliance.

Workload Impact on Layout of Storage Pools and Projects

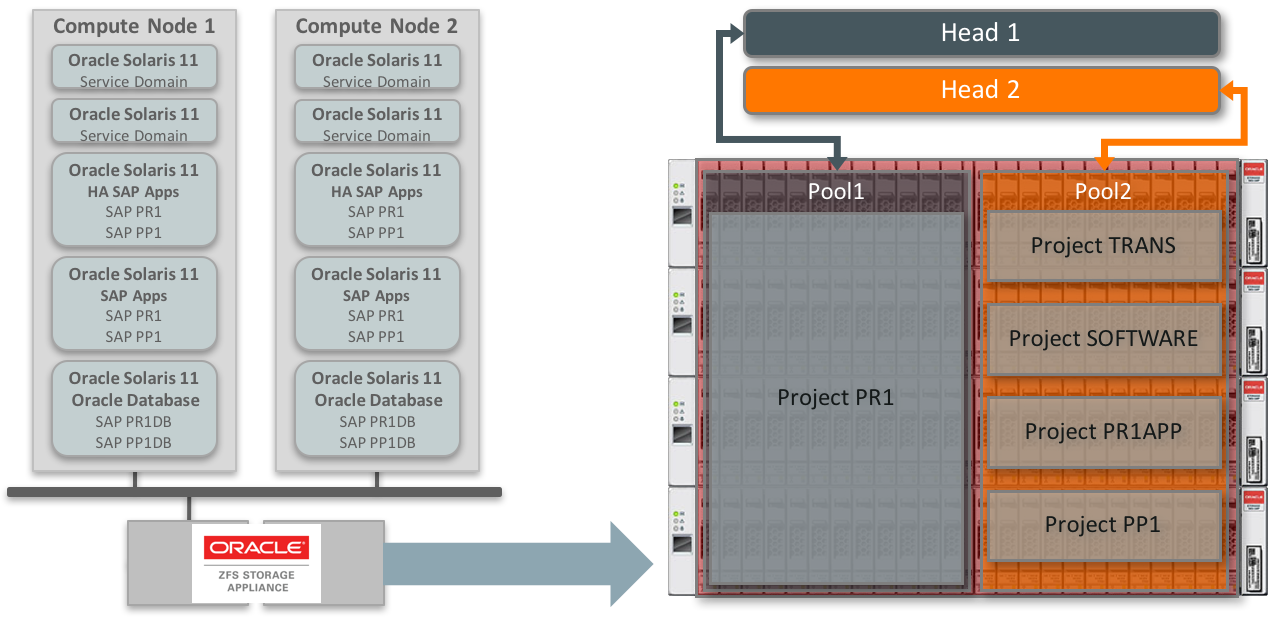

In the example deployment, the Oracle ZFS Storage Appliance system was configured with two controller heads in active-active mode to support two SAP systems (PR1 and PP1). One storage pool was created per appliance head (pool1 on head 1 and pool2 on head 2), each using half the disk drives available (see the technical white paper "Secure Consolidation of SAP Systems on Highly Available Oracle Infrastructure" for more details).

The layout was designed across the two clustered controller heads to accommodate the specific I/O requirements for the PR1 and PP1 SAP system workloads. In the example solution, the PR1 system is an ERP system with heavy database workloads using an Oracle RAC database, while the PP1 system is an SAP Enterprise Portal system using a single-instance Oracle Database configuration with failover (Figure 1).

Note: For more information on the benefits of clustering Oracle ZFS Storage Appliance controller heads, see the technical white paper "Best Practices for Oracle ZFS Storage Appliance Clustered Controllers".

Figure 1: SAP system configuration and storage pools in the example deployment.

Based on workload requirements, it was decided to dedicate the first controller head exclusively to Oracle Database storage needs for SAP system PR1. The second controller head serves the rest of the storage needs for the infrastructure, namely Oracle Database for SAP system PP1, the binaries and SAP file systems for both SAP systems, iSCSI LUNs for clustering requirements, and boot and swap devices for the virtualized environments.

After the storage pools are created, projects must be created on the appliance to define groups of shares with common administrative and storage settings. In addition to simplifying storage administration, projects are created in line with other important criteria, such as data consistency and security requirements.

Project Layout Impact on Data Consistency

Each project acts as a data consistency group for all the shares it contains. Therefore, we recommend creating a project for each individual database and its application. This provides a level of atomic consistency when performing snapshots or replication. When data transactions span multiple shares, integrity between the shares must be guaranteed for those transactions. When a project is defined for the database and its associated SAP application, each share within the project is replicated to the same target, on the same schedule, and with the same options as the parent project. Each share is also replicated in the same stream, using the same project-level snapshots as the other shares that inherit the project's configuration. This capability is important for applications that require data consistency among multiple shares, and it influenced the placement of projects and shares in the example deployment.

Project Layout Impact on Security

Projects also define a common administrative control point for managing shares and enable authorized administrative access using a role-based access control (RBAC) security model. For more information on the appliance's administrative model, see the Oracle ZFS Storage Appliance Security Guide.

SAP Storage Space Requirements

Table 4 summarizes capacity recommendations for SAP storage on a per-instance basis. To support multiple instances, the recommended space requirements must be multiplied accordingly.

Table 4. SAP Storage Space Requirements

| Application Instance | Minimum Storage Space Requirement (per Instance) |

| SAP Central Services (SCS) instance | 2 GB |

| Enqueue Replication Server (ERS) instance for the SCS, if required | 2 GB |

| ABAP SAP Central Services (ASCS) instance | 2 GB |

| Enqueue Replication Server (ERS) instance for the ASCS, if required | 2 GB |

| Primary Application Server (PAS) instance | 5 GB |

| Additional Application Server (AAS) instance | 5 GB |
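As a rough illustration of the multiplication described above, the following shell sketch adds up the Table 4 minimums for a planned set of instances. The function name `sap_min_storage_gb` is hypothetical, and the figures are only the per-instance minimums from Table 4, so the result should be read as a lower bound rather than a sizing recommendation.

```shell
#!/bin/sh
# Hypothetical helper: sum the Table 4 minimum storage (in GB) for a list of
# SAP instance types. One argument per planned instance.
sap_min_storage_gb() {
    total=0
    for inst in "$@"; do
        case "$inst" in
            SCS|ASCS|ERS) total=$((total + 2)) ;;   # 2 GB each (Table 4)
            PAS|AAS)      total=$((total + 5)) ;;   # 5 GB each (Table 4)
            *) echo "unknown instance type: $inst" >&2; return 1 ;;
        esac
    done
    echo "$total"
}

# Example: one ASCS, its ERS, one PAS, and two AAS instances.
sap_min_storage_gb ASCS ERS PAS AAS AAS
```

Passing one argument per instance keeps the calculation explicit when, for example, several AAS instances are planned for the same SAP system.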

Storage Layout

Given the workload, data consistency, security, and storage capacity requirements discussed above, we created the storage pools, projects, and shares on the Oracle ZFS Storage Appliance system using the structure and options shown in Table 5. The storage layout outlined here should be modified according to your own implementation-specific workload and application requirements.

Projects and File Systems for Pool1 on Head 1

As mentioned previously, because of the database-intensive nature of the ERP workload, we decided to dedicate the first controller head of the Oracle ZFS Storage Appliance system exclusively to Oracle Database file systems for SAP system PR1. Table 5 lists the file systems created in Pool1 for SAP system PR1 and the respective file system properties (record size, logbias value, primarycache value, and compression). (For descriptions of these options, see "Project Properties" in the Oracle ZFS Storage Appliance Administration Guide.)

Note: Depending on the workload profile, the record size of the sapdata* file systems may need to be adjusted, increasing the size if there are predominantly sequential read/write operations, or decreasing it if there are mostly random I/O operations.

Table 5. Properties of Projects and File Systems Created in Pool1

| Head | Project | File System | Record Size | logbias Value | primarycache Value | Compression |

| 1 | PR1 | PR1 project (settings inherited as indicated) | 8 KB | Latency | All data and metadata | Off |

| 1 | PR1 | 12102 | 128 KB | Latency | All data and metadata | Off |

| 1 | PR1 | backup | 128 KB | Throughput | No cache | LZJB |

| 1 | PR1 | cfgtoollogs | Inherited | Inherited | Inherited | Inherited |

| 1 | PR1 | crs1 | Inherited | Inherited | Inherited | Inherited |

| 1 | PR1 | crs2 | Inherited | Inherited | Inherited | Inherited |

| 1 | PR1 | crs3 | Inherited | Inherited | Inherited | Inherited |

| 1 | PR1 | mirrlogA | 128 KB | Latency | No cache | Off |

| 1 | PR1 | mirrlogB | 128 KB | Latency | No cache | Off |

| 1 | PR1 | oraarch | 128 KB | Throughput | No cache | Off |

| 1 | PR1 | oracle-client | 128 KB | Latency | All data and metadata | Off |

| 1 | PR1 | oraflash | 64 KB | Throughput | No cache | Off |

| 1 | PR1 | origlogA | 128 KB | Latency | No cache | Off |

| 1 | PR1 | origlogB | 128 KB | Latency | No cache | Off |

| 1 | PR1 | saparch | 128 KB | Throughput | No cache | LZJB |

| 1 | PR1 | sapbackup | 64 KB | Throughput | No cache | Off |

| 1 | PR1 | sapcheck | 64 KB | Throughput | No cache | Off |

| 1 | PR1 | sapdata1 | 8 KB | Throughput | All data and metadata | Off |

| 1 | PR1 | sapdata2 | 128 KB | Throughput | No cache | Off |

| 1 | PR1 | sapdata3 | Inherited | Inherited | Inherited | Inherited |

| 1 | PR1 | sapdata4 | 8 KB | Throughput | All data and metadata | Off |

| 1 | PR1 | sapprof | 128 KB | Throughput | No cache | Off |

| 1 | PR1 | sapreorg | 64 KB | Latency | All data and metadata | Off |

| 1 | PR1 | saptrace | Inherited | Inherited | Inherited | Inherited |

Table 6 lists the mount points for the file systems created in Pool1.

Table 6. File Systems and Mount Points for Oracle RAC Files

| Head | Project | File System | Oracle ZFS Storage Appliance Mount Point | NFS Mount Point |

| 1 | PR1 | 12102 | /export/sapt58/PR1/12102 | /oracle/PR1/12102 (mounted in any zone where PR1 database will run) |

| 1 | PR1 | backup | /export/sapt58/PR1/backup | Backup folder for PR1 database |

| 1 | PR1 | cfgtoollogs | /export/sapt58/PR1/cfgtoollogs | /oracle/PR1/cfgtoollogs |

| 1 | PR1 | crs1 | /export/sapt58/PR1/crs1 | /oracle/PR1/crs1 |

| 1 | PR1 | crs2 | /export/sapt58/PR1/crs2 | /oracle/PR1/crs2 |

| 1 | PR1 | crs3 | /export/sapt58/PR1/crs3 | /oracle/PR1/crs3 |

| 1 | PR1 | mirrlogA | /export/sapt58/PR1/mirrlogA | /oracle/PR1/mirrlogA |

| 1 | PR1 | mirrlogB | /export/sapt58/PR1/mirrlogB | /oracle/PR1/mirrlogB |

| 1 | PR1 | oraarch | /export/sapt58/PR1/oraarch | /oracle/PR1/oraarch |

| 1 | PR1 | oracle-client | /export/sapt58/PR1/oracle-client | /oracle/client (mounted in any zone where PR1 database and SAP system PR1 application servers will run) |

| 1 | PR1 | oraflash | /export/sapt58/PR1/oraflash | /oracle/PR1/oraflash |

| 1 | PR1 | origlogA | /export/sapt58/PR1/origlogA | /oracle/PR1/origlogA |

| 1 | PR1 | origlogB | /export/sapt58/PR1/origlogB | /oracle/PR1/origlogB |

| 1 | PR1 | saparch | /export/sapt58/PR1/saparch | /oracle/PR1/saparch |

| 1 | PR1 | sapbackup | /export/sapt58/PR1/sapbackup | /oracle/PR1/sapbackup |

| 1 | PR1 | sapcheck | /export/sapt58/PR1/sapcheck | /oracle/PR1/sapcheck |

| 1 | PR1 | sapdata1 | /export/sapt58/PR1/sapdata1 | /oracle/PR1/sapdata1 |

| 1 | PR1 | sapdata2 | /export/sapt58/PR1/sapdata2 | /oracle/PR1/sapdata2 |

| 1 | PR1 | sapdata3 | /export/sapt58/PR1/sapdata3 | /oracle/PR1/sapdata3 |

| 1 | PR1 | sapdata4 | /export/sapt58/PR1/sapdata4 | /oracle/PR1/sapdata4 |

| 1 | PR1 | sapprof | /export/sapt58/PR1/sapprof | /oracle/PR1/sapprof |

| 1 | PR1 | sapreorg | /export/sapt58/PR1/sapreorg | /oracle/PR1/sapreorg |

| 1 | PR1 | saptrace | /export/sapt58/PR1/saptrace | /oracle/PR1/saptrace |

Projects and File Systems for Pool2 on Head 2

Pool2 on the second controller head serves the rest of the storage needs for the infrastructure: the file systems for the PP1 database, the binaries and SAP file systems for both SAP systems, iSCSI LUNs for clustering, and boot and swap devices for all virtual servers. Table 7 lists the file systems created in Pool2 and their properties (record size, logbias value, primarycache value, and compression value).

Note: Depending on the workload profile, the record size of the sapdata* file systems may need to be adjusted, increasing the size if there are predominantly sequential read/write operations, or decreasing it if there are mostly random I/O operations.

Table 7. Properties of Projects and File Systems Created in Pool2

| Head | Project | File System | Record Size | logbias Value | primarycache Value | Compression |

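| 2 | TRANS | TRANS project (settings inherited as indicated) | 128 KB | Throughput | No cache | LZJB |

| 2 | TRANS | usr-sap-trans | Inherited | Inherited | Inherited | Inherited |

| 2 | SOFTWARE | SOFTWARE project (settings inherited as indicated) | 128 KB | Throughput | No cache | LZJB |

| 2 | SOFTWARE | software | Inherited | Inherited | Inherited | Inherited |

| 2 | PR1APP | PR1APP project (settings inherited as indicated) | 128 KB | Throughput | No cache | Off |

| 2 | PR1APP | sapmnt | Inherited | Inherited | Inherited | Inherited |

| 2 | PR1APP | usr-sap-aas-01 | Inherited | Inherited | Inherited | Inherited |

| 2 | PR1APP | usr-sap-aas-02 | Inherited | Inherited | Inherited | Inherited |

| 2 | PR1APP | usr-sap-ascs | Inherited | Inherited | Inherited | Inherited |

| 2 | PR1APP | usr-sap-haapps | Inherited | Inherited | Inherited | Inherited |

| 2 | PP1 | PP1 project (settings inherited as indicated) | 128 KB | Throughput | All data and metadata | Off |

| 2 | PP1 | 11204 | 128 KB | Latency | All data and metadata | Off |

| 2 | PP1 | backup | 128 KB | Throughput | No cache | LZJB |

| 2 | PP1 | cfgtoollogs | Inherited | Inherited | Inherited | Inherited |

| 2 | PP1 | mirrlogA | 128 KB | Latency | No cache | Off |

| 2 | PP1 | mirrlogB | 128 KB | Latency | No cache | Off |

| 2 | PP1 | oraarch | Inherited | Inherited | Inherited | Inherited |

| 2 | PP1 | oracle | 128 KB | Latency | All data and metadata | Off |

| 2 | PP1 | oracle-client | 128 KB | Latency | All data and metadata | Off |

| 2 | PP1 | oraflash | 64 KB | Throughput | No cache | Off |

| 2 | PP1 | origlogA | 128 KB | Latency | No cache | Off |

| 2 | PP1 | origlogB | 128 KB | Latency | No cache | Off |

| 2 | PP1 | saparch | 128 KB | Throughput | No cache | LZJB |

| 2 | PP1 | sapbackup | 64 KB | Throughput | No cache | Off |

| 2 | PP1 | sapcheck | 64 KB | Throughput | No cache | Off |

| 2 | PP1 | sapdata1 | 8 KB | Throughput | All data and metadata | Off |

| 2 | PP1 | sapdata2 | Inherited | Inherited | Inherited | Inherited |

| 2 | PP1 | sapdata3 | 8 KB | Throughput | No cache | Off |

| 2 | PP1 | sapdata4 | 8 KB | Throughput | All data and metadata | Off |

| 2 | PP1 | sapmnt | Inherited | Inherited | Inherited | Inherited |

| 2 | PP1 | sapprof | 128 KB | Throughput | No cache | Off |

| 2 | PP1 | sapreorg | 64 KB | Latency | All data and metadata | Off |

| 2 | PP1 | saptrace | Inherited | Inherited | Inherited | Inherited |

| 2 | PP1 | stage | 64 KB | Throughput | No cache | Off |

| 2 | PP1 | usr-sap-aas-01 | Inherited | Inherited | Inherited | Inherited |

| 2 | PP1 | usr-sap-aas-02 | Inherited | Inherited | Inherited | Inherited |

| 2 | PP1 | usr-sap-scs | Inherited | Inherited | Inherited | Inherited |

| 2 | PP1 | usr-sap-haapps | Inherited | Inherited | Inherited | Inherited |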

Table 8 through Table 12 list the mount points for each file system created in Pool2. Because the Oracle Database instances and the SAP system PR1 and PP1 instances run in different zone clusters, each file system must be NFS-mounted on the corresponding virtual servers (that is, in specific zones and zone clusters).

Table 8. File Systems and Mount Points for Binaries for Single-Instance Oracle Database Configuration

| Head | Project | File System | Oracle ZFS Storage Appliance Mount Point | NFS Mount Point |

| 2 | PP1 | oracle | /export/sapt58/PP1/oracle | /oracle (mounted in any zone where PP1 database will run) |

| 2 | PP1 | oracle-client | /export/sapt58/PP1/oracle-client | /oracle/client (mounted in any zone where PP1 database and SAP system PP1 application servers will run) |

| 2 | PP1 | 11204 | /export/sapt58/PP1/11204 | /oracle/PP1/11204 (mounted in any zone where PP1 database will run) |

| 2 | PP1 | backup | /export/sapt58/PP1/backup | Backup folder for PP1 database |

Table 9. File Systems and Mount Point for Files for Single-Instance Oracle Database Configuration

| Head | Project | File System | Oracle ZFS Storage Appliance Mount Point | NFS Mount Point |

| 2 | PP1 | cfgtoollogs | /export/sapt58/PP1/cfgtoollogs | /oracle/PP1/cfgtoollogs |

| 2 | PP1 | mirrlogA | /export/sapt58/PP1/mirrlogA | /oracle/PP1/mirrlogA |

| 2 | PP1 | mirrlogB | /export/sapt58/PP1/mirrlogB | /oracle/PP1/mirrlogB |

| 2 | PP1 | oraarch | /export/sapt58/PP1/oraarch | /oracle/PP1/oraarch |

| 2 | PP1 | oraflash | /export/sapt58/PP1/oraflash | /oracle/PP1/oraflash |

| 2 | PP1 | origlogA | /export/sapt58/PP1/origlogA | /oracle/PP1/origlogA |

| 2 | PP1 | origlogB | /export/sapt58/PP1/origlogB | /oracle/PP1/origlogB |

| 2 | PP1 | saparch | /export/sapt58/PP1/saparch | /oracle/PP1/saparch |

| 2 | PP1 | sapbackup | /export/sapt58/PP1/sapbackup | /oracle/PP1/sapbackup |

| 2 | PP1 | sapcheck | /export/sapt58/PP1/sapcheck | /oracle/PP1/sapcheck |

| 2 | PP1 | sapdata1 | /export/sapt58/PP1/sapdata1 | /oracle/PP1/sapdata1 |

| 2 | PP1 | sapdata2 | /export/sapt58/PP1/sapdata2 | /oracle/PP1/sapdata2 |

| 2 | PP1 | sapdata3 | /export/sapt58/PP1/sapdata3 | /oracle/PP1/sapdata3 |

| 2 | PP1 | sapdata4 | /export/sapt58/PP1/sapdata4 | /oracle/PP1/sapdata4 |

| 2 | PP1 | sapprof | /export/sapt58/PP1/sapprof | /oracle/PP1/sapprof |

| 2 | PP1 | sapreorg | /export/sapt58/PP1/sapreorg | /oracle/PP1/sapreorg |

| 2 | PP1 | saptrace | /export/sapt58/PP1/saptrace | /oracle/PP1/saptrace |

Table 10. File Systems and Mount Points for SAP System PR1

| Head | Project | File System | Oracle ZFS Storage Appliance Mount Point | NFS Mount Point |

| 2 | PR1APP | usr-sap-ascs | /export/sapt58/PR1APP/usr-sap-ascs | /usr/sap (mounted in zone clusters epr1-ascs-01 and epr1-ascs-02) |

| 2 | PR1APP | usr-sap-haapps | /export/sapt58/PR1APP/usr-sap-haapps | /usr/sap (mounted in zone clusters epr1-haapps-01 and epr1-haapps-02) |

| 2 | PR1APP | usr-sap-aas-01 | /export/sapt58/PR1APP/usr-sap-aas-01 | /usr/sap (mounted in the zone for SAP additional application servers without HA in Node 1) |

| 2 | PR1APP | usr-sap-aas-02 | /export/sapt58/PR1APP/usr-sap-aas-02 | /usr/sap (mounted in the zone for SAP additional application servers without HA in Node 2) |

| 2 | PR1APP | sapmnt | /export/sapt58/PR1APP/sapmnt | /sapmnt/PR1 (mounted in any zone where SAP system PR1 and PR1 database will run) |

Table 11. File Systems and Mount Points for SAP System PP1

| Head | Project | File System | Oracle ZFS Storage Appliance Mount Point | NFS Mount Point |

| 2 | PP1 | usr-sap-scs | /export/sapt58/PP1/usr-sap-scs | /usr/sap (mounted in zone clusters epp1-scs-01 and epp1-scs-02) |

| 2 | PP1 | usr-sap-haapps | /export/sapt58/PP1/usr-sap-haapps | /usr/sap (mounted in zone clusters epp1-haapps-01 and epp1-haapps-02) |

| 2 | PP1 | sapmnt | /export/sapt58/PP1/sapmnt | /sapmnt/PP1 (mounted in any zone where SAP system PP1 and PP1 database will run) |

Table 12. File Systems and Mount Points Common to SAP Systems PR1 and PP1

| Head | Project | File System | Oracle ZFS Storage Appliance Mount Point | NFS Mount Point |

| 2 | TRANS | usr-sap-trans | /export/sapt58/TRANS/usr-sap-trans | /usr/sap/trans (mounted in any zone where SAP systems PR1 and PP1 will run) |

Enabling Jumbo Frames

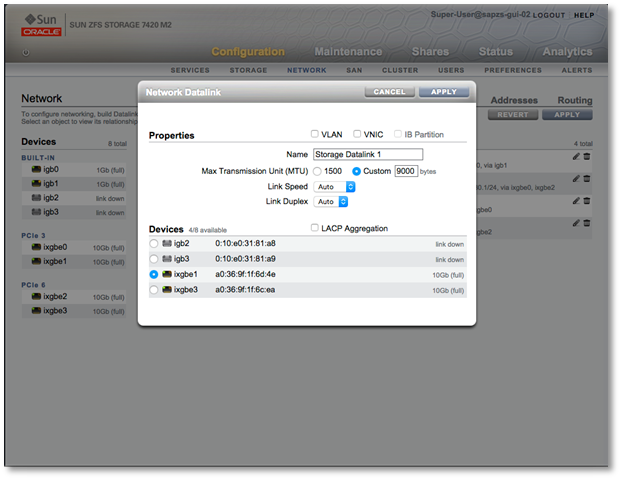

The maximum transmission unit (MTU) is the largest payload that a single Ethernet frame can carry; by default, the MTU size is 1,500 bytes. Increasing the MTU size to 9,000 bytes (enabling jumbo frames) lets each frame carry more data with less protocol overhead, resulting in greater network efficiency, lower I/O latency, and higher throughput. When deploying Oracle Optimized Solution for SAP, the use of jumbo frames is recommended for the private network that interconnects shared storage to the SAP and Oracle Database instances. Note that you must configure jumbo frame support on both the server and storage sides of the network, as well as on any network device in between, physical or virtual.
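To make the overhead argument concrete, the following shell sketch estimates what share of the bytes on the wire is TCP payload at a given MTU. It is only an approximation under stated assumptions: IPv4 and TCP headers without options (40 bytes inside the MTU), and untagged Ethernet framing (38 bytes per frame for preamble, header, frame check sequence, and inter-frame gap). A VLAN tag would add 4 bytes per frame. The function name `payload_efficiency` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: percentage of on-wire bytes that carry TCP payload
# for a given MTU, assuming IPv4 + TCP without options and untagged frames.
payload_efficiency() {
    awk -v mtu="$1" 'BEGIN {
        ip_tcp = 40   # IPv4 header (20) + TCP header (20), counted inside the MTU
        eth    = 38   # preamble and SFD (8) + header (14) + FCS (4) + inter-frame gap (12)
        printf "%.2f\n", 100 * (mtu - ip_tcp) / (mtu + eth)
    }'
}

payload_efficiency 1500   # standard frames
payload_efficiency 9000   # jumbo frames
```

Under these assumptions, the payload share rises from roughly 95 percent to roughly 99 percent. Sending about one-sixth as many frames for the same amount of data also reduces per-packet processing on the hosts and the appliance.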

Enabling Support for Jumbo Frames on the Server

This command configures the MTU size in the primary domain on the server during virtual switch configuration. VLAN 105 is used for the storage network.

root@sapt58-ctrl-01:~# ldm add-vsw net-dev=net5 mtu=9000 vid=102,103,105 pri-priv-vsw primary

Listing 1: Configuring jumbo frames on the server.

For more information, see "How to Configure Virtual Network and Virtual Switch Devices to Use Jumbo Frames" in the Oracle VM Server for SPARC 3.4 Administration Guide. If the environment is not virtualized using Oracle VM Server for SPARC, see "Enabling Support for Jumbo Frames" in the Oracle Solaris guide, Configuring and Administering Network Components in Oracle Solaris 11.2.

Enabling Support for Jumbo Frames on the Storage

Once the MTU size is set on the server side, it is necessary to configure the network datalinks on the Oracle ZFS Storage Appliance system. To create the datalinks using the browser interface (Figure 2) on the appliance, navigate to Configuration -> Network and click the + icon next to Datalinks. Enter the datalink name as Storage Datalink 1, and set the MTU size to 9,000 bytes to enable jumbo frames. Select the first device, ixgbe1, and click Apply.

Figure 2: Configuring jumbo frames on device ixgbe1.

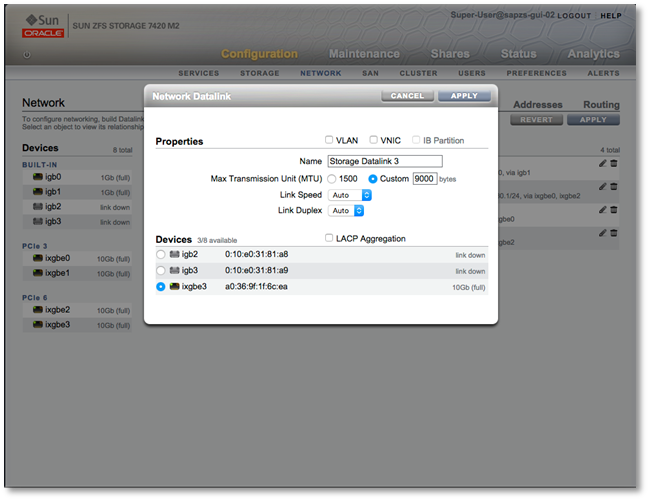

Repeat the process for device ixgbe3. Click the + icon next to Datalinks, enter the datalink name as Storage Datalink 3, and set the MTU size to 9,000 bytes to enable jumbo frames. Select device ixgbe3 and click Apply.

Figure 3: Configuring jumbo frames on device ixgbe3.

NFS Mount Options

This article focuses on SAP systems deployed on Oracle Solaris with NFS-mounted file systems on NAS storage, specifically file systems on an Oracle ZFS Storage Appliance system. The NFS mount option recommendations given here are for SAP and Oracle Database file systems that reside on the appliance. As with other best practices and recommendations in this article, the parameters and options listed here are based on internal engineering deployments, customer implementations, and related documentation.

Figure 4 depicts virtual servers in the example deployment that mount SAP and Oracle Database file systems. These virtual servers are constructed using Oracle's built-in virtualization technologies: Oracle VM Server for SPARC logical domains (LDOMs) and Oracle Solaris Zones. To configure some servers for HA, certain zones are clustered using the Oracle Solaris Cluster software. This section lists example /etc/vfstab entries used to mount file systems for the virtual servers. Figure 4 shows the naming conventions for the domains, zones, and zone clusters that comprise the virtual servers.

Figure 4: Virtualization layout for example deployment of Oracle Optimized Solution for SAP.

Recommended NFS Mount Options for SAP File Systems

In a typical SAP environment, SAP application servers access the following shared file systems:

/sapmnt is the base directory for the SAP system. It contains executable kernel programs, log files, and profiles.

/usr/sap contains files for the operation of SAP application instances. It should be mounted in any zone running SAP instances.

/usr/sap/trans is the global transport directory for all SAP systems. It contains data and log files for objects transported between SAP systems. This transport directory needs to be accessible by at least one application server instance of each SAP system, but preferably by all instances.

Table 13 shows mount option recommendations for SAP file systems. Note that /usr/sap can be backed by a different share in each zone cluster. In the example deployment of Oracle Optimized Solution for SAP, there are two different /usr/sap shares: one for each SAP system, PR1 and PP1. As shown in Table 13, in SAP system PR1, the first /usr/sap share is used by the ASCS and Enqueue Replication Server (ERS) instances and must be mounted from the zones running ASCS and ERS (these zones are named epr1-ascs-01 and epr1-ascs-02). The second /usr/sap share is for the PAS and AAS instances and must be mounted from the zones running PAS and AAS (epr1-haapps-01 and epr1-haapps-02). This means that the appropriate /usr/sap share should be mounted only in the corresponding zone.

Table 13. Recommended Mount Options for SAP File Systems

| File System | Oracle Solaris Zone | Mount Options |

| /sapmnt/<SID> | Every SAP zone | rw,bg,hard,[intr],rsize=1048576,wsize=1048576,proto=tcp,vers=3 |

| /sapmnt/<SID> | Every Oracle Database zone | rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp |

| /usr/sap (for ASCS and ERS instances) | Every zone where the corresponding SAP instance(s) might run | rw,bg,hard,[intr],rsize=1048576,wsize=1048576,proto=tcp,vers=3 |

| /usr/sap (for PAS and AAS instances) | Every zone where the corresponding SAP instance(s) might run | rw,bg,hard,[intr],rsize=1048576,wsize=1048576,proto=tcp,vers=3 |

| /usr/sap/trans | Every SAP zone | rw,bg,hard,[intr],rsize=1048576,wsize=1048576,proto=tcp,vers=3 |

Important: The file system /sapmnt/<SID> must be mounted in every zone where the corresponding SAP instances will run. Because BR*Tools require it, /sapmnt/<SID> must also be mounted in the zones where Oracle Database will run.

The SAP file systems under Oracle Solaris Cluster control must have the mount option intr (instead of nointr) to allow signals to interrupt file operations on these mount points. Using the nointr option instead would prevent the sapstartsrv process from being successfully stopped in the event that a file system server is unresponsive.
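As a minimal sketch of how the Table 13 options translate into a configuration line, the shell function below prints an /etc/vfstab entry for an NFS share. The function name `nfs_vfstab_entry` and its argument order are hypothetical. The host name and share path in the example call are taken from Table 12 and the appliance naming used in this article. The intr or nointr choice is passed explicitly so that file systems under Oracle Solaris Cluster control can be given intr.

```shell
#!/bin/sh
# Hypothetical helper: print one /etc/vfstab line for an NFS share using the
# Table 13 option set. Arguments: server, export path, mount point,
# mount-at-boot (yes|no), and intr|nointr.
nfs_vfstab_entry() {
    server=$1 export_path=$2 mount_point=$3 at_boot=$4 intr_opt=$5
    opts="rw,bg,hard,${intr_opt},rsize=1048576,wsize=1048576,proto=tcp,vers=3"
    # vfstab fields: device to mount, device to fsck, mount point, FS type,
    # fsck pass, mount at boot, mount options
    printf '%s:%s - %s nfs - %s %s\n' \
        "$server" "$export_path" "$mount_point" "$at_boot" "$opts"
}

# Transport directory in a zone cluster: cluster-managed, so not mounted at boot.
nfs_vfstab_entry izfssa-02 /export/sapt58/TRANS/usr-sap-trans /usr/sap/trans no intr
```

Generating entries from one function keeps the option string identical across zones, which makes a stray nointr or a mismatched rsize/wsize value easier to spot.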

Examples of /etc/vfstab Entries in Application Domains

In the example deployment depicted in Figure 4, there are two application domains: one for mission-critical SAP components and application servers (HA APP) and another for SAP application servers that do not require high availability (APP). This section gives examples of /etc/vfstab entries for the zones and zone clusters in these two application domains.

Note that in the zone clusters, except for the /export/software file system, the mount at boot option is set to no because the file systems are managed as Oracle Solaris Cluster storage resources. In the zones without HA (in which Oracle Solaris Cluster resources are not used), the file systems are configured with the mount at boot option set to yes.
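The mount-at-boot convention described above can be checked with a short filter. The sketch below lists the mount points of NFS entries whose mount at boot field is yes. In a zone cluster's vfstab, only /export/software would be expected in the output. The function name `nfs_mounted_at_boot` is hypothetical, and the sample lines in the usage comment are abbreviated for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print the mount point (field 3) of every NFS entry
# whose mount-at-boot field (field 6) is "yes". Reads the named vfstab
# file(s), or standard input when no file is given.
nfs_mounted_at_boot() {
    awk '$1 !~ /^#/ && $4 == "nfs" && $6 == "yes" { print $3 }' "$@"
}

# Usage (on a host): nfs_mounted_at_boot /etc/vfstab
# Usage (sample input, abbreviated options):
#   printf '%s\n' \
#     'izfssa-02:/export/sapt58/SOFTWARE/software - /export/software nfs - yes rw,bg,hard' \
#     'izfssa-02:/export/sapt58/TRANS/trans - /usr/sap/trans nfs - no rw,bg,hard' \
#     | nfs_mounted_at_boot
```

The filter assumes that each vfstab entry occupies a single line with seven whitespace-separated fields.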

HA APP Domain (sapt58-haapp-0x)

Listing 2 through Listing 4 give the /etc/vfstab entries used to mount SAP and Oracle Database file systems in virtual servers in the HA APP domain.

root@sapt58-haapp-01:~# cat /etc/vfstab | grep izfssa

izfssa-02:/export/sapt58/SOFTWARE/software - /export/software nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

root@sapt58-haapp-01:~#

Listing 2: Example /etc/vfstab entry for global zone sapt58-haapp-01.

root@sapt58-haapp-01:~# cat /zones/pr1-haapps-zc/root/etc/vfstab | grep izfssa

izfssa-02:/export/sapt58/SOFTWARE/software - /export/software nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-01:/export/sapt58/PR1/sapmnt - /sapmnt/PR1 nfs - no rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-01:/export/sapt58/PR1/usr-sap - /usr/sap nfs - no rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-02:/export/sapt58/TRANS/trans - /usr/sap/trans nfs - no rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-02:/export/sapt58/ORACLE/oracle - /oracle nfs - no rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

root@sapt58-haapp-01:~#

Listing 3: Example /etc/vfstab entry for zone cluster pr1-haapps-zc.

root@sapt58-haapp-01:~# cat /zones/pr1-ascs-zc/root/etc/vfstab | grep izfssa

izfssa-02:/export/sapt58/SOFTWARE/software - /export/software nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-01:/export/sapt58/PR1/sapmnt - /sapmnt/PR1 nfs - no rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-01:/export/sapt58/PR1/usr-sap - /usr/sap nfs - no rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-02:/export/sapt58/TRANS/trans - /usr/sap/trans nfs - no rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

root@sapt58-haapp-01:~#

Listing 4: Example /etc/vfstab entry for zone cluster pr1-ascs-zc.

APP Domain (sapt58-app-0x)

Listing 5 and Listing 6 show example /etc/vfstab entries that mount SAP and Oracle Database file systems in zones in the APP domain. Because these zones support non-HA applications, Oracle Solaris Cluster is not used to manage zone resources. As a result, the file systems are mounted with the mount at boot option set to yes.

root@sapt58-app-01:~# cat /etc/vfstab | grep izfssa

izfssa-02:/export/sapt58/SOFTWARE/software - /export/software nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

root@sapt58-app-01:~#

Listing 5: Example /etc/vfstab entry for global zone sapt58-app-01.

root@sapt58-app-01:~# cat /zones/pr1-aas-01/root/etc/vfstab | grep izfssa

izfssa-02:/export/sapt58/SOFTWARE/software - /export/software nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-01:/export/sapt58/PR1/sapmnt - /sapmnt/PR1 nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-01:/export/sapt58/PR1/usr-sap - /usr/sap nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-02:/export/sapt58/TRANS/trans - /usr/sap/trans nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-02:/export/sapt58/ORACLE/oracle - /oracle nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

root@sapt58-app-01:~#

Listing 6: Example /etc/vfstab entries for zones pr1-aas-01 and pr1-aas-02.
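The practical difference between the cluster-managed zones and the APP domain zones is the sixth /etc/vfstab field (mount at boot). The following is a minimal sketch, not part of the tested configuration, of how that field could be reviewed for every NFS entry. The function name nfs_boot_flags and the sample file are hypothetical, and the sketch assumes the standard seven-field vfstab layout and a POSIX awk (on some Oracle Solaris releases that may mean calling nawk or /usr/xpg4/bin/awk instead).

```shell
#!/bin/sh
# Hypothetical helper: print "<mount point> <mount at boot>" for each NFS
# entry in a vfstab-format file. Field order assumed: device to mount,
# device to fsck, mount point, FS type, fsck pass, mount at boot, options.
nfs_boot_flags() {
    awk '$1 !~ /^#/ && $4 == "nfs" { print $3, $6 }' "$1"
}

# Demo on a throwaway sample file built from two entries shown earlier.
sample=$(mktemp)
cat > "$sample" <<'EOF'
izfssa-02:/export/sapt58/SOFTWARE/software - /export/software nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3
izfssa-01:/export/sapt58/PR1/sapmnt - /sapmnt/PR1 nfs - no rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3
EOF
nfs_boot_flags "$sample"
rm -f "$sample"
```

In a zone managed by Oracle Solaris Cluster, every file system other than /export/software would be expected to report no; in the APP domain zones, every entry would be expected to report yes.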

NFS Mount Options for Oracle Database File Systems

This section describes the recommended NFS mount options for Oracle Database file systems that support SAP systems on Oracle Solaris and are NFS-mounted from an Oracle ZFS Storage Appliance system.

As described in this article, the example deployment uses Oracle Database file systems based on recommendations in the SAP installation guide, SAP Note 527843, and test implementations for Oracle Optimized Solution for SAP. (Access to SAP Notes requires logon and authentication to the SAP Marketplace.) The recommended mount options for Oracle Database file systems originate from My Oracle Support document 1567137.1. (Access to My Oracle Support documents requires logon and authentication to My Oracle Support.)

Note: For optimal performance, the option forcedirectio should be set on all file systems that contain Oracle Database data files. It should not be used on file systems that contain binaries.

Table 14 through Table 16 show mount option recommendations for Oracle Database file systems.

Table 14. Mount Options for Single-Instance Database Configuration

| File System | Mount Options |

| Data files | rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp,noac,suid,forcedirectio |

| Binaries | rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp,suid |

Table 15. Mount Options for Oracle Database with Oracle RAC

| File System | Mount Options |

| Data files | rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp,noac,suid,forcedirectio |

| Binaries | rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp,noac,suid |

Table 16. Mount Options for the Cluster Ready Services Voting Disk or Oracle Cluster Registry Components of Oracle Clusterware

| File System | Mount Options |

| Voting Disk or Oracle Cluster Registry | rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp,noac,forcedirectio |

Note: On Oracle Solaris, the default NFS mount options include suid, so suid remains in effect unless nosuid is explicitly set.
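The rule that forcedirectio belongs on data file systems but not on binaries can be checked mechanically. The sketch below is illustrative only: has_opt is a hypothetical helper, and the two option strings are copied from Table 14. It matches whole comma-separated options, so nosuid is never mistaken for suid.

```shell
#!/bin/sh
# Hypothetical helper: succeed if option $2 appears as a whole
# comma-separated element of mount option string $1.
has_opt() {
    case ",$1," in
        *",$2,"*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Option strings taken from Table 14 (single-instance configuration).
data_opts='rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp,noac,suid,forcedirectio'
bin_opts='rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp,suid'

for opts in "$data_opts" "$bin_opts"; do
    if has_opt "$opts" forcedirectio; then
        echo "forcedirectio set:     $opts"
    else
        echo "forcedirectio not set: $opts"
    fi
done
```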

Examples of /etc/vfstab Entries in the Database Domain

Listing 7 and Listing 8 show example /etc/vfstab entries used to mount Oracle Database file systems in the global zone and zone cluster associated with the database domain. Note that in the zone cluster for Oracle Database, the mount at boot option is set to no (except for the /export/software file system) because the file systems for Oracle Database are managed as Oracle Solaris Cluster storage resources.

root@sapt58-db-01:~# cat /etc/vfstab | grep izfssa

izfssa-02:/export/sapt58/SOFTWARE/software - /export/software nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

root@sapt58-db-01:~#

Listing 7: Example /etc/vfstab entry for global zone sapt58-db-01.

izfssa-02:/export/sapt58/SOFTWARE/software - /export/software nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-02:/export/sapt58/ORACLE/pr1 - /oracle/PR1/121 nfs - no rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-01:/export/sapt58/PR1/stage - /oracle/stage nfs - no rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3

izfssa-01:/export/sapt58/PR1/crs1 - /oracle/PR1/crs1 nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/crs2 - /oracle/PR1/crs2 nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/crs3 - /oracle/PR1/crs3 nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/origlogA - /oracle/PR1/origlogA nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/origlogB - /oracle/PR1/origlogB nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/mirrlogA - /oracle/PR1/mirrlogA nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/mirrlogB - /oracle/PR1/mirrlogB nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/oraarch - /oracle/PR1/oraarch nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/oraflash - /oracle/PR1/oraflash nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/sapbackup - /oracle/PR1/sapbackup nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/sapcheck - /oracle/PR1/sapcheck nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/sapdata1 - /oracle/PR1/sapdata1 nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/sapdata2 - /oracle/PR1/sapdata2 nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/sapdata3 - /oracle/PR1/sapdata3 nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/sapdata4 - /oracle/PR1/sapdata4 nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/sapdata5 - /oracle/PR1/sapdata5 nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/sapreorg - /oracle/PR1/sapreorg nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

izfssa-01:/export/sapt58/PR1/saparch - /oracle/PR1/saparch nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid

Listing 8: Example /etc/vfstab entries for zone cluster pr1-db-zc.
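With this many near-identical entries, a mistyped or truncated option (for example, sui in place of suid) is easy to miss. The following sketch shows one possible sanity check. The function name check_nfs_opts, the server name srv, and the sample paths are hypothetical, and the list of accepted option names is an assumption limited to the options that appear in this article, not a complete list of valid NFS mount options. A POSIX awk is assumed.

```shell
#!/bin/sh
# Hypothetical helper: report NFS entries in a vfstab-format file whose
# option field contains a name outside the set used in this article.
# Returns nonzero if at least one unexpected option is found.
check_nfs_opts() {
    awk '
    BEGIN {
        # Assumption: only these option names are expected.
        n = split("rw bg hard nointr rsize wsize proto vers noac forcedirectio suid", k, " ")
        for (i = 1; i <= n; i++) known[k[i]] = 1
    }
    $1 !~ /^#/ && $4 == "nfs" {
        m = split($7, opt, ",")
        for (i = 1; i <= m; i++) {
            name = opt[i]
            sub(/=.*/, "", name)          # strip "=value" from rsize=..., etc.
            if (!(name in known)) {
                print $3 ": unexpected option \"" opt[i] "\""
                bad = 1
            }
        }
    }
    END { exit bad + 0 }
    ' "$1"
}

# Demo on a throwaway sample file; the second entry is deliberately broken.
sample=$(mktemp)
cat > "$sample" <<'EOF'
srv:/export/demo/sapdata1 - /oracle/PR1/sapdata1 nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,suid
srv:/export/demo/sapbackup - /oracle/PR1/sapbackup nfs - no rw,bg,hard,nointr,rsize=1048576,wsize=1048576,proto=tcp,noac,forcedirectio,vers=3,sui
EOF
check_nfs_opts "$sample" || echo "review the entries listed above"
rm -f "$sample"
```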

Creating and Mounting File Systems in Database Zones

Listing 9 and Listing 10 show how to create the mount points and mount the nested file systems in the database zones pr1-db-01 and pr1-db-02, respectively.

root@epr1-db-01:~# mkdir -p /oracle/PR1/121 /oracle/GRID /oracle/oraInventory /oracle/stage \

/oracle/PR1/crs1 /oracle/PR1/crs2 /oracle/PR1/crs3 /oracle/PR1/origlogA /oracle/PR1/origlogB \

/oracle/PR1/mirrlogA /oracle/PR1/mirrlogB /oracle/PR1/oraarch /oracle/PR1/oraflash \

/oracle/PR1/sapbackup /oracle/PR1/sapcheck /oracle/PR1/sapdata1 /oracle/PR1/sapdata2 \

/oracle/PR1/sapdata3 /oracle/PR1/sapdata4 /oracle/PR1/sapdata5 /oracle/PR1/sapreorg \

/oracle/PR1/saparch

root@epr1-db-01:~#

root@epr1-db-01:~# for fs in /oracle/PR1/121 /oracle/stage /oracle/PR1/crs1 /oracle/PR1/crs2 \

/oracle/PR1/crs3 /oracle/PR1/origlogA /oracle/PR1/origlogB /oracle/PR1/mirrlogA \

/oracle/PR1/mirrlogB /oracle/PR1/oraarch /oracle/PR1/oraflash /oracle/PR1/sapbackup \

/oracle/PR1/sapcheck /oracle/PR1/sapdata1 /oracle/PR1/sapdata2 /oracle/PR1/sapdata3 \

/oracle/PR1/sapdata4 /oracle/PR1/sapdata5 /oracle/PR1/sapreorg /oracle/PR1/saparch; do set -x; \

mount $fs; set +x; done

Listing 9: Creating mount points and mounting file systems in database zone pr1-db-01.

root@epr1-db-02:~# mkdir -p /oracle/PR1/121 /oracle/GRID /oracle/oraInventory /oracle/stage \

/oracle/PR1/crs1 /oracle/PR1/crs2 /oracle/PR1/crs3 /oracle/PR1/origlogA /oracle/PR1/origlogB \

/oracle/PR1/mirrlogA /oracle/PR1/mirrlogB /oracle/PR1/oraarch /oracle/PR1/oraflash \

/oracle/PR1/sapbackup /oracle/PR1/sapcheck /oracle/PR1/sapdata1 /oracle/PR1/sapdata2 \

/oracle/PR1/sapdata3 /oracle/PR1/sapdata4 /oracle/PR1/sapdata5 /oracle/PR1/sapreorg \

/oracle/PR1/saparch

root@epr1-db-02:~#

root@epr1-db-02:~# for fs in /oracle/PR1/121 /oracle/stage /oracle/PR1/crs1 /oracle/PR1/crs2 \

/oracle/PR1/crs3 /oracle/PR1/origlogA /oracle/PR1/origlogB /oracle/PR1/mirrlogA \

/oracle/PR1/mirrlogB /oracle/PR1/oraarch /oracle/PR1/oraflash /oracle/PR1/sapbackup \

/oracle/PR1/sapcheck /oracle/PR1/sapdata1 /oracle/PR1/sapdata2 /oracle/PR1/sapdata3 \

/oracle/PR1/sapdata4 /oracle/PR1/sapdata5 /oracle/PR1/sapreorg /oracle/PR1/saparch; do set -x; \

mount $fs; set +x; done

Listing 10: Creating mount points and mounting file systems in database zone pr1-db-02.
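Because the same list of paths is typed twice in each zone, one alternative is to derive the mount points from /etc/vfstab itself. The sketch below is a hedged illustration rather than the procedure used in testing: the function names mount_points_under and prepare_mounts and the DRY_RUN variable are hypothetical, and a POSIX awk is assumed. It defaults to a dry run that only prints the commands. Directories that have no vfstab entry (such as /oracle/GRID and /oracle/oraInventory above) would still need to be created separately.

```shell
#!/bin/sh
# Hypothetical helper: list mount points of NFS entries in vfstab-format
# file $1 whose mount point starts with prefix $2.
mount_points_under() {
    awk -v p="$2" '$1 !~ /^#/ && $4 == "nfs" && index($3, p) == 1 { print $3 }' "$1"
}

# Hypothetical helper: create each mount point and mount it.
# DRY_RUN defaults to 1, in which case the commands are only printed.
prepare_mounts() {
    mount_points_under "$1" "$2" | while read -r mp; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "mkdir -p $mp && mount $mp"
        else
            mkdir -p "$mp" && mount "$mp"
        fi
    done
}

# Demo (dry run) on a throwaway sample file with two entries from Listing 8.
sample=$(mktemp)
cat > "$sample" <<'EOF'
izfssa-02:/export/sapt58/SOFTWARE/software - /export/software nfs - yes rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3
izfssa-01:/export/sapt58/PR1/stage - /oracle/stage nfs - no rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3
EOF
prepare_mounts "$sample" /oracle
rm -f "$sample"
```

Running with DRY_RUN=0 inside the zone would execute the commands instead of printing them. Reviewing the dry-run output first is the safer order.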

Mount Options for Oracle RMAN

Table 17 shows the recommended mount options for Oracle RMAN. For file systems that hold Oracle RMAN backup sets, image copies, or Data Pump (a feature of Oracle Database) dump files, do not specify the noac (no attribute cache) mount option. Oracle RMAN and Data Pump do not check for this option, and specifying it can adversely affect performance: when noac is set, clients do not cache file attributes locally, so they must fetch them from the server every time a file is accessed (for example, when Data Pump is appending the next few bytes of metadata or Oracle RMAN is changing some bytes during an incremental backup).

Table 17. Mount Options for Oracle RMAN

| File System | Mount Options |

| File system configured for Oracle RMAN | rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp,forcedirectio |

Mount Options for Single-Instance Oracle Database Configuration with Failover

Table 18 lists file systems and mount options for a single-instance Oracle Database configuration with failover. In this environment, the database is deployed in a zone cluster using NAS storage. Oracle Automatic Storage Management (Oracle ASM) is not used.

Table 18. Mount Options for Single-Instance Oracle Database Configuration with Failover

| File System | Zone | Mount Options |

| /oracle | Every Oracle Database zone | rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp,suid |

| /oracle/client | Every Oracle Database zone; every zone for SAP PAS and AAS instances | rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp,suid |

| /oracle/<SID>/<release> | Every Oracle Database zone | rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,proto=tcp,suid |

| /oracle/<SID>/origlogA | Every Oracle Database zone | rw,bg,hard,nointr,rsize=1048576,wsize=1048576,vers=3,forcedirectio,proto=tcp,suid |

| /oracle/<SID>/origlogB | Every Oracle Database zone | rw,bg,hard,nointr,rsize=1048576,wsize=10485 |