NoSQL DBAs on the Rise

by Przemyslaw Piotrowski

NoSQL and Big Data solutions are taking the world by storm and are increasingly being pushed into corporate environments to improve time to market and increase development agility.

With the advent of new breeds of data management systems built on principles other than relational algebra, doubts have grown about whether the Database Administrator is still necessary and what role the DBA should play. Even if most of these new systems depend entirely on development teams and all maintenance effort looks redundant at first, once all production demands are considered it becomes crystal clear that Database Administrators must remain a vital part of the enterprise development chain. 24/7 availability, full transactional consistency and a reliable recovery strategy cannot be dismissed when even remotely considering a move away from relational database management systems, whether transactional or warehouse-type. Read on to discover the challenges and considerations that affect core database tasks, data model design and the data itself, and to stay up to date with the ongoing technology drift to NoSQL and Big Data solutions.

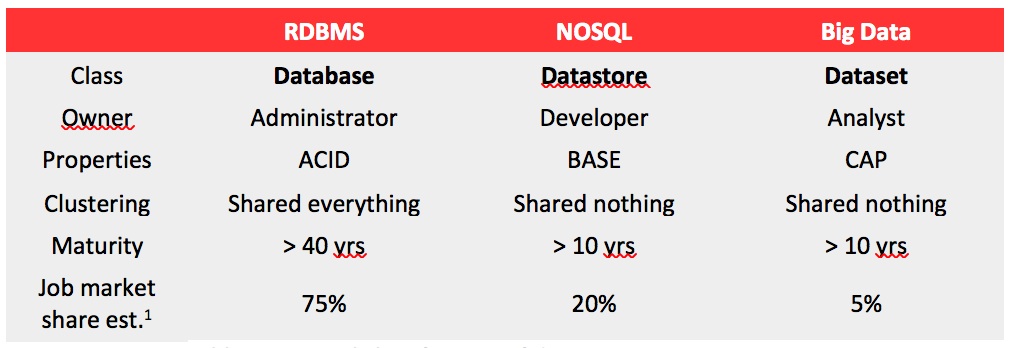

Leading market research companies anticipate exponential growth of NoSQL and Big Data systems, with an increasing number of successful pilots in corporate environments achieving extreme capacity and massive throughput. While the market share of these systems is still largely uncharted, they can be expected to eventually supplement, and sometimes supersede, traditional database technology. Table 1 depicts today's data management landscape, along with the naming conventions used throughout this article.

Table 1. General classification of data management systems.

Although the terms "NoSQL" and "Big Data" are frequently used interchangeably, they clearly differ in their areas of use, their usefulness to business strategy and their ability to handle highly concurrent traffic. Broadly speaking, NoSQL fits the OLTP space, while Big Data is closer to warehouse-type workloads.

Data Dawn

Relational databases and the Structured Query Language, both conceived in the early 1970s, were quickly picked up by business because they offered a unique ability to abstract the logical data layout from its physical location on disk. This made database development and usage much simpler and far more efficient. Donald D. Chamberlin and Raymond F. Boyce, the authors of SQL, considered Edgar F. Codd's relational model to be the simplest possible general-purpose data structure and leveraged that simplicity to design a flexible query language that remains vital to the majority of today's IT systems. The relational model made even more sense at a time when memory was scarce, storage was measured in megabytes at best, and data had to be heavily normalized to keep storage costs acceptable.

Early NoSQL solutions were touted as having a near-zero deployment barrier. Like databases, data stores are persistent, highly available, horizontally scalable and, with some caveats, consistent most of the time. The need for such solutions originally came from mammoth Internet companies that require massive bandwidth on every tier, and perhaps especially on the database tier.

Likewise, Big Data emerged from the needs of the very same Internet behemoths, originating from the idea of storing massive amounts of user data for tracking, personalizing content and offering a bespoke user experience. Such immense data volumes have long been familiar in research fields such as genome analysis, earthquake simulation and weather forecasting; however, the recent information explosion has made heavy data consumption more appealing and affordable to businesses.

Many Ways to Say No

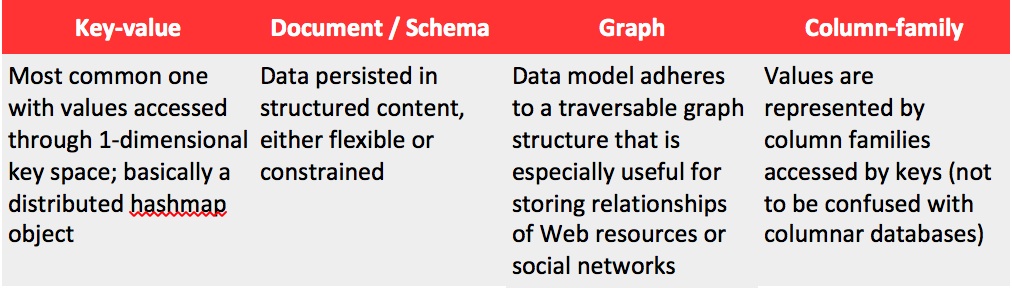

With neither a central authority nor a standards body, NoSQL solutions inevitably fragmented: each system was initially designed as an in-house technology and only later released into the wild as an open source project. Implemented in different languages, with different objectives, different availability requirements and incompatible APIs, they can still be divided into the four major kinds listed in Table 2.

Table 2. Major Classifications of NoSQL Datastores.

Simple as they seem at first, all these data stores rely on a close relationship with the application, with the API tightly integrated into application code. Although there have been attempts to create a query language that would provide common ground for NoSQL solutions, so far they have received limited adoption from the community.

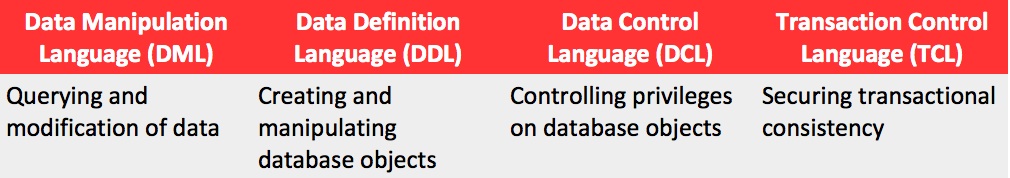

When running a relational database, a lot of time is invested in data model design, in the architecture of supplemental structures such as constraints and indexes, and in the analysis of future data access patterns. That investment pays off later with the ability to run many different workloads, of either OLTP or DW character, against the same data. Even if much of that thought also goes into NoSQL system design, such systems are still deployed at a more aggressive pace than traditional RDBMS solutions. What an RDBMS addresses with the Data Manipulation Language (a subset of SQL), NoSQL systems must handle case by case, either through application code or through a query abstraction native to each feature or engine.

Typical RDBMS features that, once mapped to a NoSQL environment, usually require a dedicated implementation in the application layer include table joins, indexes and partitioning. To SQL developers this seems like overkill: they are used to an almost infinitely flexible tool which, in the worst case, may run slowly but rarely lacks functionality.
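To make this concrete, here is a minimal sketch assuming a hypothetical store that exposes nothing but `set` and `get` by key. The `KeyValueStore` class, the `customer:`/`order:`/`idx:` key naming scheme and the `add_order` and `orders_with_customer_name` helpers are all illustrative, not any product's API. What a relational database offers as a one-line join backed by an index has to be hand-coded here: the application maintains its own secondary index and performs the join one lookup at a time.

```python
class KeyValueStore:
    """Hypothetical store exposing only set/get by primary key."""

    def __init__(self):
        self._data = {}

    def set(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)


def add_order(store, order):
    """Write an order and maintain a hand-rolled secondary index on customer_id."""
    order_key = f"order:{order['id']}"
    store.set(order_key, order)
    index_key = f"idx:customer_orders:{order['customer_id']}"
    # Read-modify-write with no transaction support: two concurrent writers
    # could each read the old list, and one of the updates would be lost.
    existing = store.get(index_key) or []
    store.set(index_key, existing + [order_key])


def orders_with_customer_name(store, customer_id):
    """Application-side equivalent of
    SELECT o.id, c.name, o.total FROM orders o
    JOIN customers c ON c.id = o.customer_id WHERE c.id = :customer_id
    """
    customer = store.get(f"customer:{customer_id}")
    if customer is None:
        return []  # inner-join semantics: no customer, no rows
    rows = []
    for order_key in store.get(f"idx:customer_orders:{customer_id}") or []:
        order = store.get(order_key)  # one extra lookup per joined row
        rows.append((order["id"], customer["name"], order["total"]))
    return rows


store = KeyValueStore()
store.set("customer:1", {"id": 1, "name": "Acme"})
store.set("customer:2", {"id": 2, "name": "Globex"})
add_order(store, {"id": 10, "customer_id": 1, "total": 250})
add_order(store, {"id": 11, "customer_id": 2, "total": 90})
add_order(store, {"id": 12, "customer_id": 1, "total": 40})
print(orders_with_customer_name(store, 1))  # [(10, 'Acme', 250), (12, 'Acme', 40)]
```

Every new access path (another index, another join direction) means another helper like these, plus the burden of keeping each index consistent with the data it points to.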

Table 3. Structured Query Language subsets.

Because of the considerable complexity of these sub-languages, most NoSQL engines skip DDL, DCL and TCL functionality altogether, leaving only basic set and get operations available to the user. So even if the initial software development process seems more agile and lean, further enhancements and continuous maintenance will eventually drift towards 'relational' budgets. And since these emerging technologies evolve very fast, at some point they will need to break backward compatibility, leaving applications on unsupported releases.

Are We Big Yet?

The boundary where “traditional” data ends and Big Data begins is constantly being pushed out by ever-growing storage capacity. Data that was considered big 10 years ago can now fit onto a portable hard drive. Many advisory and research companies have tried to come up with ballpark figures for how much information exists in digital form today and how much is generated each year, but the estimates range from hundreds of exabytes to zettabytes.

Some claim that such volumes no longer fit into relational databases and must move to different paradigms. One such substitute is map-reduce, a breakthrough yet not-so-new programming model that divides data into independent chunks and processes them in parallel on clusters of commodity hardware. In its raw form, map-reduce is far from usable, especially for business users, so shortly after its introduction it spawned a number of wrappers, including SQL-compatible ones. Years later, Big Data and relational databases get along very well, with two-way compatibility through adapters and extensions. Data analysts will often sit with a data architect or DBA to design ETL processes that span multiple data layers and diverse data management systems. End users, too, are more likely to connect to a database staging endpoint than to query datasets directly.
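The map-reduce idea itself fits in a few lines. The following is a minimal, single-machine sketch of the classic word-count example, assuming a thread pool stands in for cluster nodes (in CPython, threads do not run this CPU-bound work truly in parallel). The `chunk`, `map_phase`, `reduce_phase` and `word_count` names and the sample log lines are hypothetical; real frameworks add distribution, shuffling over the network and fault tolerance on top of this pattern.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def chunk(records, size):
    """Split the input into independent chunks of at most `size` records."""
    return [records[i:i + size] for i in range(0, len(records), size)]


def map_phase(lines):
    """Map: count words within one chunk, independently of all other chunks."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def reduce_phase(left, right):
    """Reduce: merge two partial results; addition is associative, so merge order is irrelevant."""
    return left + right


def word_count(records, chunk_size=2, workers=4):
    chunks = chunk(records, chunk_size)
    # Threads stand in for cluster nodes; a real framework would ship
    # each chunk to the machine that stores it.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_phase, chunks))
    return reduce(reduce_phase, partials, Counter())


log = [
    "error disk full",
    "warning cpu high",
    "error disk full",
    "info backup done",
    "error network down",
]
result = word_count(log)
print(result["error"], result["disk"], result["backup"])  # 3 2 1
```

Because each chunk is processed without looking at any other, the result does not depend on how the input is split, which is exactly what lets the work spread across many machines.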

Recent research indicates that corporate Big Data most often starts small: new data projects grow into datasets of their own rather than converting existing databases. Such projects usually attract less attention from support groups and, as a result, eventually require “post-engineering” to prove their value.

The Database Administrator

Of everything DBAs do, keeping data accessible to users remains one of the most vital parts of the job. If they fail to meet service level agreements, the business suffers and customers get hit. Availability is far more than redundancy: it encompasses delivering agreed service levels for performance, throughput and capacity. The DBA is frequently the meeting point between the storage, networking and systems teams and needs to make the database resilient to the degradation of any of those systems. A properly designed database system will bounce back not only from power surges or network outages but also from natural disasters or human error. Provisioning and maintaining such an environment effectively takes a combination of skills, and it took decades of professional engineering to reach this point.

On the other hand, NoSQL builds upon the principles of horizontal scaling and redundancy to make capacity a linear function of the number of nodes. The price, however, is that every new kind of query hitting the data store requires new lines of application code. More importantly, security is an essential aspect of Big Data and NoSQL systems that often goes unnoticed despite its tremendous significance to enterprise operations. Most data stores and datasets are protected only in the sense of being isolated on a network, and vendors today broadly agree in advising users to remain cautious and keep these systems tightly locked down, because the built-in security layer is still immature.
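Linear capacity usually rests on partitioning: every key is deterministically assigned to one node, so each node stores and serves only its share. The following is a minimal sketch assuming simple hash-modulo placement; `node_for`, `distribution` and the `user:` keys are hypothetical and not taken from any particular data store.

```python
import hashlib
from collections import Counter


def node_for(key: str, num_nodes: int) -> int:
    """Assign a key to a node using a stable hash.

    Python's built-in hash() is randomized per process for strings, so a
    SHA-256 digest is used to get the same placement on every run.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes


def distribution(keys, num_nodes):
    """Count how many keys each node would own."""
    return Counter(node_for(k, num_nodes) for k in keys)


keys = [f"user:{i}" for i in range(100_000)]
for n in (2, 4, 8):
    per_node = distribution(keys, n)
    # Each node ends up with roughly len(keys) / n keys, so doubling the
    # node count roughly halves the load per node.
    print(n, min(per_node.values()), max(per_node.values()))
```

Plain modulo placement is the simplest choice, but changing the node count remaps most keys; production systems commonly use consistent hashing or a fixed set of virtual partitions to limit data movement when nodes are added or removed.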

In the rapidly changing landscape of data management solutions, it will become increasingly important to carry as much relational skill and experience as possible into the architecture and engineering of data stores and datasets. How to handle a Sarbanes-Oxley audit, how to perform a rolling upgrade, how to carry out a near-zero downtime migration, how to recover from a failover: that knowledge is already part of today's DBA know-how. It just needs to be extended to the newly introduced data management disciplines.

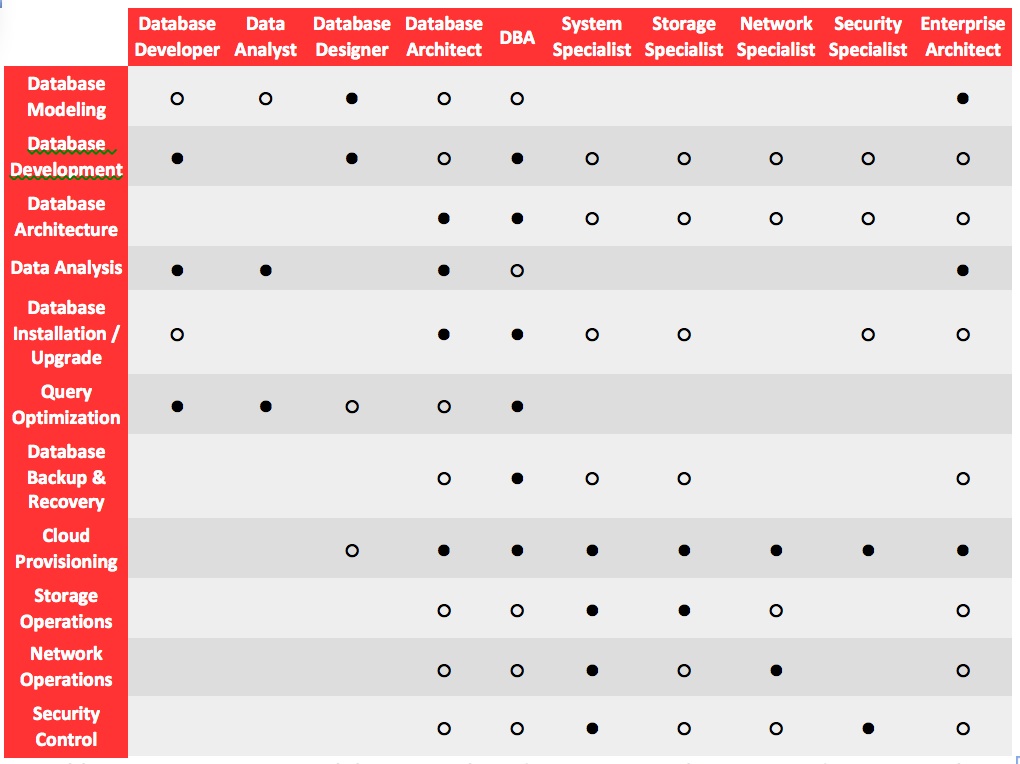

Table 4 lists the IT roles involved in database operations and support, and shows how they align in the enterprise.

Table 4. Enterprise responsibility matrix based on RDBMS architecture and a common role separation pattern (● direct, ○ indirect, none if blank).

Data is King

Judging by how the data management landscape has changed since 2000, it is a fairly safe bet that individual technologies will start losing importance while technology skills thrive as the data management software portfolio expands and diversifies. Although NoSQL and Big Data offer entirely new techniques for dealing with information, that proposition is still far from complete, and this is where the DBA can fill the gap.

This in turn demands a major change in perspective and mindset from current relational technology experts. Techniques, algorithms and patterns common to NoSQL and Big Data can be brought into database systems to improve availability, capacity and performance. It has already started: map-reduce has been used in data warehouses for many years, just under different terminology. Similarly, the key-value access patterns of data stores are not new to databases at all, and databases have been able to work asynchronously for decades. What has changed is the continuous stream of hardware breakthroughs, coupled with evolving requirements for data availability and size. As Moore's Law approaches its limits, and just ahead of the coming in-memory revolution, it may turn out that all data management systems and skills must be brought to bear on problems, and that only their combination wins.
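Both points can be illustrated with an ordinary relational engine. The following is a minimal sketch using Python's built-in `sqlite3` module; the `kv` and `events` tables and the `put`/`get` helpers are hypothetical. A two-column table with a primary key provides plain key-value access, and a `GROUP BY` query performs what map-reduce would describe as grouping records by key and reducing each group.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Key-value access: a primary-keyed, two-column table is all a key-value store needs.
conn.execute("CREATE TABLE kv (k TEXT PRIMARY KEY, v TEXT NOT NULL)")


def put(key, value):
    # INSERT OR REPLACE overwrites an existing key, like a data store's set().
    with conn:  # commits on success, rolls back on error
        conn.execute("INSERT OR REPLACE INTO kv (k, v) VALUES (?, ?)", (key, value))


def get(key):
    row = conn.execute("SELECT v FROM kv WHERE k = ?", (key,)).fetchone()
    return row[0] if row else None


put("session:42", '{"user": "alice"}')
put("session:42", '{"user": "bob"}')
print(get("session:42"))  # {"user": "bob"}
print(get("session:99"))  # None

# Map-reduce under another name: GROUP BY groups rows by key, COUNT(*) reduces each group.
with conn:
    conn.execute("CREATE TABLE events (kind TEXT)")
    conn.executemany("INSERT INTO events (kind) VALUES (?)",
                     [("error",), ("info",), ("error",)])
print(conn.execute(
    "SELECT kind, COUNT(*) FROM events GROUP BY kind ORDER BY kind").fetchall())
# [('error', 2), ('info', 1)]
```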

About the Author

Przemyslaw Piotrowski is a database expert with experience in the design, development and support of large-scale computer systems. Przemyslaw is an Oracle Database Certified Master specializing in High Availability, Performance Tuning and Emerging Technologies.

--------

1. Estimate based on job share as of May 2014. Sources: Indeed, Monster, LinkedIn.