How to Plan ZFS Storage for OpenStack on Oracle Solaris

by Cindy Swearingen

This article describes considerations for planning ZFS back-end storage pools that support your OpenStack on Oracle Solaris deployment.

Introduction

An easy way to get started with OpenStack is by downloading and installing the OpenStack Unified Archive (UAR) to create a single-system deployment that is designed for testing or evaluation. Be sure to review the README for system requirements.

OpenStack provides block storage (Cinder) and object storage (Swift) that can be deployed on Oracle Solaris ZFS or on Oracle ZFS Storage Appliance. This article also provides tips on how to monitor the ZFS back-end file systems and volumes that are created by Cinder or Swift in support of your OpenStack deployment.

Advantages of Running OpenStack Cinder and Swift on Oracle Solaris

There are several advantages of running OpenStack Cinder and Swift on Oracle Solaris:

- The back-end storage is ZFS and not a legacy file system.

- Zones on shared storage (ZOSS) support OpenStack instance migration.

- Hosting Swift data on a ZFS storage appliance uses Oracle Solaris NFSv4 extended attributes and locking, both of which Swift relies on (for example, Swift stores object metadata in extended attributes).

- Oracle Solaris Cinder and Swift nodes can easily be deployed with a Unified Archive.

- There is no need to create three copies of Swift data. Use ZFS redundancy and store one copy.

- ZFS can automatically cache hot data to improve performance. A good example of this type of workload is booting many virtual machines (VMs).

- You can take advantage of integrated ZFS data services such as compression, snapshotting, and cloning.

- DTrace Python probes can be used to dynamically investigate Cinder or Swift services.

OpenStack Horizon Management

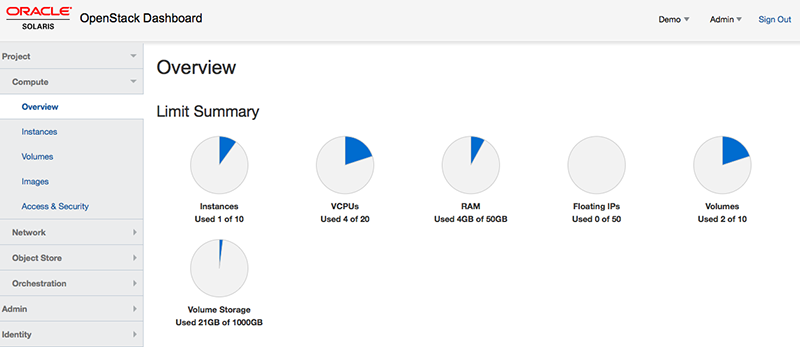

Most OpenStack management tasks are performed through the browser-based OpenStack Horizon interface (shown in Figure 1). Horizon displays the number of instances and vCPUs, as well as the amount of RAM and available volume storage. It provides a quick and easy way to deploy and manage instances in a multitenant environment. OpenStack also provides a set of command-line interfaces for managing your OpenStack deployment.

Figure 1. Horizon interface

General Considerations

You can review the available flavors in OpenStack Horizon to identify the default non-global zone and kernel zone allocations for CPU, memory, and disk storage. These flavors are customizable, so you can modify them to meet your business requirements.

These are the operating system, memory, and storage requirements:

- Control node: Global zone installed with Oracle Solaris 11.3 Beta or above

- Compute node: Sufficient RAM for deployed Nova instances, for example, approximately 8 GB for kernel zones and 2-4 GB for non-global zones. Additional CPUs for kernel zones as required.

- Storage node: 100–200 GB ZFS storage space for Glance images and Nova instances
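The compute-node RAM guideline above can be turned into a rough estimate for a planned mix of instances. The following Python sketch uses only the article's approximations: about 8 GB per kernel zone and 2 to 4 GB per non-global zone. The function name and the hypothetical instance counts are illustrative assumptions. The estimate leaves out memory for the global zone and the OpenStack services, so treat it as a lower bound rather than a sizing rule.

```python
"""Rough RAM estimate for Nova instances on a compute node, using the
approximations in this article: about 8 GB per kernel zone and 2-4 GB
per non-global zone. Function and variable names are hypothetical."""

KERNEL_ZONE_GB = 8            # approximate RAM per kernel zone instance
NON_GLOBAL_ZONE_GB = (2, 4)   # approximate RAM range per non-global zone instance


def estimate_instance_ram_gb(kernel_zones: int, non_global_zones: int) -> tuple[int, int]:
    """Return (low, high) RAM estimates in GB for the planned instances.

    Only the instances are counted; memory for the global zone and the
    OpenStack services on the node is not included.
    """
    if kernel_zones < 0 or non_global_zones < 0:
        raise ValueError("instance counts must be non-negative")
    kz_total = kernel_zones * KERNEL_ZONE_GB
    low = kz_total + non_global_zones * NON_GLOBAL_ZONE_GB[0]
    high = kz_total + non_global_zones * NON_GLOBAL_ZONE_GB[1]
    return low, high


if __name__ == "__main__":
    # Hypothetical plan: 2 kernel zones and 3 non-global zones.
    low, high = estimate_instance_ram_gb(kernel_zones=2, non_global_zones=3)
    print(f"Plan for roughly {low}-{high} GB of RAM for instances")
```

For the hypothetical plan of two kernel zones and three non-global zones, the sketch reports a range of 22 to 28 GB for the instances alone.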

You can customize your configuration by adding compute nodes and Cinder or Swift storage nodes. By default, the zones that you create for use as OpenStack instances are allocated in the root pool (rpool), as are the OpenStack instances of Oracle Solaris zones, unless you specify separate devices or ZFS volumes.

OpenStack Cinder Considerations

In addition, be aware of the following Cinder considerations:

- If you add storage nodes, create a separate ZFS storage pool for Cinder to allocate ZFS volumes for kernel zone instances.

- By default, Cinder uses the following entry in /etc/cinder/cinder.conf and allocates volumes from the root pool:

    volume_driver=cinder.volume.drivers.solaris.zfs.ZFSVolumeDriver

- If you are using SAN-based storage, modify /etc/cinder/cinder.conf to use the following driver:

    cinder.volume.drivers.solaris.zfs.ZFSFCDriver

- You can deploy Cinder on the ZFS storage appliance and use the appliance's REST interface to manage Cinder volumes by following the steps described in the README.
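Before you change drivers or pools, it can help to see which settings a node currently uses. The following Python sketch reads cinder.conf text and reports volume_driver and zfs_volume_base. zfs_volume_base is the setting that selects the pool, for example rpool/cinder. The sketch assumes that both keys live in the [DEFAULT] section, as in a simple single-back-end configuration. The function name is hypothetical. The driver descriptions cover only the two drivers named in this article.

```python
"""Report the Cinder ZFS settings discussed in this article from cinder.conf text.

Assumes volume_driver and zfs_volume_base (if present) are in [DEFAULT].
The function name is hypothetical."""
import configparser

# Driver class names mentioned in this article.
DRIVERS = {
    "cinder.volume.drivers.solaris.zfs.ZFSVolumeDriver": "local ZFS volumes",
    "cinder.volume.drivers.solaris.zfs.ZFSFCDriver": "SAN-based storage",
}


def cinder_zfs_settings(text: str) -> dict:
    """Parse cinder.conf text and return the driver and volume base settings."""
    # interpolation=None: leave '%' characters in values unexpanded.
    # strict=False: tolerate duplicate keys instead of raising.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(text)
    defaults = parser["DEFAULT"]
    driver = defaults.get("volume_driver")
    return {
        "volume_driver": driver,
        "driver_use": DRIVERS.get(driver, "not covered in this article"),
        "zfs_volume_base": defaults.get("zfs_volume_base"),  # None if unset
    }
```

On a Cinder node, you might call it as `cinder_zfs_settings(open("/etc/cinder/cinder.conf").read())`. A `None` value for zfs_volume_base means the key is not set explicitly and the driver default applies.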

OpenStack Swift Considerations

Also be aware of the following Swift considerations:

- You can deploy Swift on multiple storage nodes that point to an Oracle ZFS Storage Appliance. This provides convenience, redundancy, and replication, and you can reduce the number of Swift data copies to be stored.

- You can create a Unified Archive of the initial Swift node and then use it to replicate Swift storage services, as described in this blog.
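The claim that ZFS redundancy lets you store fewer Swift copies comes down to simple arithmetic. Raw disk capacity equals the logical data multiplied by the number of Swift copies and by the copies the storage layer keeps. The following Python sketch compares three Swift copies on non-redundant disks with one copy on a two-way ZFS mirror. The 10 TB data size is a hypothetical input. The sketch ignores metadata, free-space headroom, and compression.

```python
"""Raw disk capacity needed for Swift object data under two layouts.
Inputs are hypothetical; metadata, headroom, and compression are ignored."""


def raw_capacity_tb(data_tb: float, swift_replicas: int, storage_copies: int) -> float:
    """Raw capacity = logical data x Swift replicas x copies kept by the storage layer.

    storage_copies is 1 for plain (non-redundant) disks and 2 for a
    two-way ZFS mirror.
    """
    if data_tb < 0 or swift_replicas < 1 or storage_copies < 1:
        raise ValueError("invalid sizing inputs")
    return data_tb * swift_replicas * storage_copies


if __name__ == "__main__":
    data = 10.0  # hypothetical: 10 TB of object data
    print("3 Swift copies on plain disks:", raw_capacity_tb(data, 3, 1), "TB")
    print("1 Swift copy on a ZFS mirror:", raw_capacity_tb(data, 1, 2), "TB")
```

With these inputs, the single-copy mirrored layout needs 20 TB of raw disk instead of 30 TB and still survives a single disk failure in each mirror.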

Best Practices for Managing OpenStack Storage on ZFS

Here are some considerations for configuring ZFS pools:

- Create a separate ZFS storage pool for either Cinder or Swift storage to keep OpenStack data separate from the global zone's root pool.

- A mirrored ZFS pool performs best for small, random I/O operations.

- Reduce downtime due to hardware failures by adding spares to your ZFS storage pool.

- Enable ZFS compression to reduce storage consumption.

- Using the steps provided in "ZFS Storage Pool Maintenance and Monitoring Practices," configure smtp-notify to send an e-mail when a disk fails.

For more information and examples, see Managing ZFS File Systems in Oracle Solaris 11.3.
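The pool recommendations above (mirroring, spares, and compression) map directly onto the zpool create and zfs create commands. The following Python sketch builds those commands as argument lists and prints them without running them, so you can review them first. The pool name, dataset name, and disk names are hypothetical placeholders. Compression is set on the dataset that Cinder or Swift uses, and child datasets and volumes inherit it.

```python
"""Build (but do not run) commands for a mirrored pool with a spare and
compression enabled. Pool, dataset, and disk names are hypothetical."""
import shlex


def pool_commands(pool: str, mirror_pairs: list[tuple[str, str]],
                  spares: list[str], dataset: str) -> list[list[str]]:
    """Return argv lists for `zpool create` and `zfs create`."""
    if not mirror_pairs:
        raise ValueError("at least one mirrored pair is required")
    create = ["zpool", "create", pool]
    for a, b in mirror_pairs:
        create += ["mirror", a, b]       # each pair becomes a two-way mirror
    if spares:
        create += ["spare", *spares]     # hot spares for failed disks
    # Compression set here is inherited by child datasets and volumes.
    dataset_cmd = ["zfs", "create", "-o", "compression=on", f"{pool}/{dataset}"]
    return [create, dataset_cmd]


if __name__ == "__main__":
    cmds = pool_commands(
        "tank",
        [("c0t1d0", "c0t2d0"), ("c0t3d0", "c0t4d0")],  # hypothetical disks
        ["c0t5d0"],
        "cinder",
    )
    for argv in cmds:
        print(shlex.join(argv))
```

Printing the commands instead of executing them keeps the sketch safe to run anywhere. Check the device names with format or a similar tool before you run the resulting commands as root.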

Here are some considerations for configuring zones:

- Non-global zones or kernel zones that are created as the golden image to be provided to Glance and deployed as OpenStack instances are stored in the root pool by default.

- A best practice is to allocate separate LUNs for the zones and use ZOSS for instance migration in a multinode environment.

Displaying ZFS File Systems That Support OpenStack

The general process for deploying OpenStack instances is as follows:

- Create and configure an Oracle Solaris zone, as needed for your tenant environment.

- Create a Unified Archive image of the configured zone.

- Add the image to Glance so that it can be used to create an OpenStack instance.

- Launch the OpenStack instance using a name and flavor of your choosing.

Then, a unique ID is automatically assigned to the OpenStack instance.
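The archive, upload, and launch steps above can be scripted once the zone is configured. The following Python sketch prints the corresponding archiveadm, glance, and nova commands without running them. The archive path, image name, and flavor name are hypothetical. The glance image properties (architecture, hypervisor_type=solariszones, vm_mode=solariszones) are assumed values for Oracle Solaris zone images. Confirm them, and any additional nova boot options such as --nic, against the README for your release.

```python
"""Print, without running, the commands for the deployment workflow above.
Paths, image and flavor names, and the glance property values are
assumptions to be checked against your release's documentation."""
import shlex


def deployment_commands(zone: str, archive: str, image: str, arch: str,
                        flavor: str, instance: str) -> list[list[str]]:
    """Return argv lists for archiving a zone, uploading it, and booting an instance."""
    return [
        # Create a Unified Archive of the configured zone.
        ["archiveadm", "create", "-z", zone, archive],
        # Upload the archive to Glance as an image.
        ["glance", "image-create", "--container-format", "bare",
         "--disk-format", "raw", "--name", image,
         "--property", f"architecture={arch}",
         "--property", "hypervisor_type=solariszones",
         "--property", "vm_mode=solariszones",
         "--file", archive],
        # Launch an instance from the image; Nova assigns the instance ID.
        ["nova", "boot", "--flavor", flavor, "--image", image, instance],
    ]


if __name__ == "__main__":
    for argv in deployment_commands(
        zone="ozone1",
        archive="/var/tmp/ozone1.uar",   # hypothetical archive path
        image="ozone1-image",            # hypothetical image name
        arch="x86_64",
        flavor="my-ngz-flavor",          # hypothetical flavor name
        instance="ongz1",
    ):
        print(shlex.join(argv))
```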

In the example below, a non-global zone named ozone1 was created on a single system, and an image of that zone was previously provided to Glance. The image was deployed as OpenStack instance ongz1, and the assigned instance number was 1.

When a non-global zone is allocated for an OpenStack instance, a ZFS file system based on the instance number is created in the root pool, for example:

    # zfs list -r -d3 -o name -H rpool/VARSHARE/zones
    rpool/VARSHARE/zones
    rpool/VARSHARE/zones/instance-00000001
    rpool/VARSHARE/zones/instance-00000001/rpool
    rpool/VARSHARE/zones/instance-00000001/rpool/ROOT
    rpool/VARSHARE/zones/instance-00000001/rpool/VARSHARE
    rpool/VARSHARE/zones/instance-00000001/rpool/export
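The dataset name in the output above follows from the instance number. The following Python sketch assumes Nova's default instance_name_template, instance-%08x, which formats the number as eight hexadecimal digits. It also uses the rpool/VARSHARE/zones layout seen above, which may differ if your zones are configured with a different zone path. The function names are hypothetical.

```python
"""Map a Nova instance number to its zone name and the dataset seen above.
Assumes Nova's default instance_name_template and the rpool/VARSHARE/zones
layout from the example; function names are hypothetical."""

INSTANCE_NAME_TEMPLATE = "instance-%08x"   # hexadecimal, zero-padded to 8 digits
ZONES_DATASET = "rpool/VARSHARE/zones"     # layout observed in the zfs list output


def instance_zone_name(instance_number: int) -> str:
    return INSTANCE_NAME_TEMPLATE % instance_number


def instance_dataset(instance_number: int) -> str:
    return f"{ZONES_DATASET}/{instance_zone_name(instance_number)}"


if __name__ == "__main__":
    print(instance_dataset(1))     # rpool/VARSHARE/zones/instance-00000001
    print(instance_zone_name(26))  # instance-0000001a (hexadecimal)
```

Because the number is hexadecimal, instance 26 becomes instance-0000001a rather than instance-00000026. Keep this in mind when you match Horizon instance IDs to zone names.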

Creating Dedicated Storage for OpenStack Cinder

If you create a separate ZFS storage pool for Cinder and allocate kernel zones for your OpenStack instances, ZFS volumes are allocated in the separate pool.

You can easily change Cinder's volume base on a single-node configuration or on each of the Cinder storage nodes, as follows:

- Modify the zfs_volume_base value in the /etc/cinder/cinder.conf file to change it from rpool/cinder to tank/cinder.

- Restart the Cinder services.
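You can script the cinder.conf edit in the first step while keeping the file's comments intact. The following Python sketch rewrites an active zfs_volume_base line if one exists. Otherwise, it uncomments and rewrites a commented-out line. It does not check which section the line is in, so it assumes a single [DEFAULT]-level setting. The function name is hypothetical. Back up cinder.conf before writing the result back, and then restart the Cinder services as described above.

```python
"""Change zfs_volume_base in cinder.conf text without disturbing other lines.
A sketch: assumes one [DEFAULT]-level setting; function name is hypothetical."""
import re

_ACTIVE = re.compile(r"^[ \t]*zfs_volume_base[ \t]*=.*$", re.MULTILINE)
_COMMENTED = re.compile(r"^[ \t]*#[ \t]*zfs_volume_base[ \t]*=.*$", re.MULTILINE)


def set_zfs_volume_base(conf_text: str, new_base: str) -> str:
    """Return conf_text with zfs_volume_base set to new_base."""
    replacement = f"zfs_volume_base={new_base}"
    # Prefer an active setting; fall back to uncommenting a commented one.
    for pattern in (_ACTIVE, _COMMENTED):
        # A function replacement avoids treating backslashes in new_base specially.
        new_text, count = pattern.subn(lambda _m: replacement, conf_text, count=1)
        if count:
            return new_text
    raise ValueError("no zfs_volume_base line found; add one under [DEFAULT]")


if __name__ == "__main__":
    sample = (
        "[DEFAULT]\n"
        "volume_driver=cinder.volume.drivers.solaris.zfs.ZFSVolumeDriver\n"
        "#zfs_volume_base=rpool/cinder\n"
    )
    print(set_zfs_volume_base(sample, "tank/cinder"), end="")
```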

In the following example, assume a kernel zone (okzone1) and an image were created and provided to Glance previously. Then, two instances (okz1 and okz2) from that image were launched. The assigned instance numbers are 2 and 3.

You can display information about the zone you created and also the zone instances that are launched from OpenStack Horizon by using the zoneadm command on the compute node:

    # zoneadm list -cv
      ID NAME              STATUS     PATH                BRAND      IP
       0 global            running    /                   solaris    shared
       3 ozone1            running    /tank/zones/ozone1  solaris    excl
       5 instance-00000001 running                        solaris    excl
       6 okzone1           running    -                   solaris-kz excl
       8 instance-00000002 running    -                   solaris-kz excl
      10 instance-00000003 running    -                   solaris-kz excl
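On a compute node with many zones, it can be useful to separate OpenStack instances from the zones you created yourself. The following Python sketch parses the colon-separated output of `zoneadm list -cp`. It assumes the field order zoneid:zonename:state:zonepath:uuid:brand:ip-type, ignores any later fields, and assumes no escaped colons in the zone path. It treats names that start with "instance-" as Nova instances, which relies on Nova's default naming template. The class and function names are hypothetical.

```python
"""Pick out OpenStack instance zones from `zoneadm list -cp` output.
Assumed field order: zoneid:zonename:state:zonepath:uuid:brand:ip-type."""
from typing import NamedTuple


class Zone(NamedTuple):
    zone_id: str   # "-" for zones that are not running
    name: str
    state: str
    brand: str


def parse_zoneadm_parsable(output: str) -> list[Zone]:
    """Parse colon-separated `zoneadm list -p` style lines into Zone records."""
    zones = []
    for line in output.splitlines():
        fields = line.split(":")
        if len(fields) < 7:
            continue  # skip blank or unexpected lines
        zones.append(Zone(zone_id=fields[0], name=fields[1],
                          state=fields[2], brand=fields[5]))
    return zones


def openstack_instances(zones: list[Zone]) -> list[Zone]:
    """Zones named by Nova's default instance-%08x template."""
    return [z for z in zones if z.name.startswith("instance-")]
```

You might feed it `subprocess.run(["zoneadm", "list", "-cp"], capture_output=True, text=True).stdout` on the compute node. The brand field then distinguishes solaris non-global zones from solaris-kz kernel zones.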

Reviewing Cinder Volume Space Consumption

The following OpenStack nova command identifies the OpenStack instance (okz1) and its associated instance ID and volume ID:

    # nova show okz1 | awk '/instance/ || /volume/'
    | OS-EXT-SRV-ATTR:instance_name        | instance-00000003                                  |
    | os-extended-volumes:volumes_attached | [{"id": "93c0ebf0-1081-48ec-83ff-e77956c83da4"}] |
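You can automate the association between the nova output and the ZFS volumes. The following Python sketch extracts the instance name and attached volume IDs from the `nova show` table. It then builds the ZFS volume names, assuming Cinder's volume-<id> naming (visible in the tank/cinder output in this article) and a zfs_volume_base of tank/cinder. The function names are hypothetical.

```python
"""Extract the instance name and attached volume IDs from `nova show` output
and derive the matching ZFS volume names. Function names are hypothetical."""
import json
from typing import Optional


def parse_nova_show(output: str) -> tuple[Optional[str], list[str]]:
    """Return (instance_name, volume_ids) from a `nova show` table."""
    instance_name, volume_ids = None, []
    for line in output.splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        if len(cells) != 2:
            continue  # skip table borders and anything that is not a key/value row
        key, value = cells
        if key == "OS-EXT-SRV-ATTR:instance_name":
            instance_name = value
        elif key == "os-extended-volumes:volumes_attached":
            volume_ids = [v["id"] for v in json.loads(value)]
    return instance_name, volume_ids


def cinder_zfs_volumes(volume_ids: list[str], volume_base: str = "tank/cinder") -> list[str]:
    """Cinder names each ZFS volume volume-<id> under zfs_volume_base."""
    return [f"{volume_base}/volume-{vid}" for vid in volume_ids]
```

Applied to the output above, the sketch yields instance-00000003 and tank/cinder/volume-93c0ebf0-1081-48ec-83ff-e77956c83da4. You can pass that name to zfs list or zfs get.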

Then, you can associate the volume ID with the tank/cinder volume output, for example:

    # zfs list -r tank/cinder
    NAME                                                      USED  AVAIL  REFER  MOUNTPOINT
    tank/cinder                                              21.7G  1.05T    31K  /tank/cinder
    tank/cinder/volume-93c0ebf0-1081-48ec-83ff-e77956c83da4  20.6G  1.05T  2.31G  -
    tank/cinder/volume-f4a6be3c-980f-4f15-9172-b6fd44060c72  1.03G  1.05T    16K  -

In the example above, Cinder created a ZFS volume in the separate pool/file system, tank/cinder, for each OpenStack instance. The size difference between the two volumes (20.6 GB versus 1.03 GB) is determined by the OpenStack instance flavor when the instances are launched.

You can confirm that the OpenStack instance okz1 was created with the _Oracle Solaris kernel zone – small_ flavor, which creates a 20 GB ZFS volume. Check either in the Horizon interface or with the following command:

    # zfs get volsize tank/cinder/volume-93c0ebf0-1081-48ec-83ff-e77956c83da4
    NAME                           PROPERTY  VALUE  SOURCE
    tank/cinder/volume-93c0ebf0-…  volsize   20G    local

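Be careful when comparing ZFS file system space utilization to ZFS pool space consumption. The ALLOC column in the following zpool list output counts only blocks that have actually been written to the pool. It does not include the space reserved for ZFS volumes, which the USED column of zfs list does include.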

    # zpool list tank
    NAME   SIZE  ALLOC   FREE  CAP  DEDUP  HEALTH  ALTROOT
    tank  1.09T  3.36G  1.08T   0%  1.00x  ONLINE  -

When managing Cinder volume space, use the zfs list command to identify volume space consumption.

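The tank pool is approximately 1 TB in size, and the Cinder volumes under tank/cinder currently use 21.7 GB. OpenStack Horizon's overview screen reports a similar figure:

Volume Storage: Used 21GB of 1000GB

Horizon's figure is the total size of the Cinder volumes (20 GB + 1 GB) measured against the project's volume storage quota. The 1000 GB limit is Cinder's default per-project quota, not the size of the pool.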
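To see where the space goes, you can compare each volume's volsize with its USED value and with the portion of USED that comes from its reservation. The following Python sketch parses the output of `zfs get -Hp -r -o name,property,value volsize,used,usedbyrefreservation tank/cinder`, which is tab-separated with exact byte values. It totals the three figures in GiB. The total volsize corresponds to Horizon's "Used 21GB" figure. USED includes reserved space that zpool list does not report as allocated. The function names are hypothetical. On volumes created without a reservation, usedbyrefreservation is 0.

```python
"""Summarize Cinder volume space from
`zfs get -Hp -r -o name,property,value volsize,used,usedbyrefreservation tank/cinder`.
Function names are hypothetical."""
from collections import defaultdict

GIB = 1024 ** 3


def volume_space(output: str) -> dict[str, dict[str, int]]:
    """Map each volume name to its numeric properties (in bytes)."""
    props: dict[str, dict[str, int]] = defaultdict(dict)
    for line in output.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue
        name, prop, value = parts
        if value.isdigit():  # "-" means the property does not apply
            props[name][prop] = int(value)
    # Keep only volumes, that is, datasets that report a volsize.
    return {n: p for n, p in props.items() if "volsize" in p}


def summarize(volumes: dict[str, dict[str, int]]) -> tuple[float, float, float]:
    """Return (total volsize, total used, total reserved) in GiB."""
    total_volsize = sum(p["volsize"] for p in volumes.values())
    total_used = sum(p.get("used", 0) for p in volumes.values())
    total_reserved = sum(p.get("usedbyrefreservation", 0) for p in volumes.values())
    return total_volsize / GIB, total_used / GIB, total_reserved / GIB
```

If total_reserved accounts for most of total_used, the volumes have reserved more space than they have written. That is the situation in the example above, where zfs list reports 21.7G used but zpool list reports only 3.36G allocated.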

Summary

Running an OpenStack on Oracle Solaris deployment on ZFS provides many advantages. You can use OpenStack's browser-based tool, Horizon, to manage your OpenStack instances and use general ZFS commands to review your ZFS file systems and space usage.

Keep in mind that many of the details shown in the commands above are available in Horizon by clicking the instance name link or the instance volume link.

It's best to let Cinder manage ZFS volumes that are created through OpenStack Horizon or through Cinder commands. Do not attempt to use ZFS commands to change characteristics of existing Cinder volumes that are in use.

About the Author

Cindy Swearingen is an Oracle Solaris Product Manager who specializes in ZFS and storage features.

Revision 1.0, 10/12/2015
