Scope

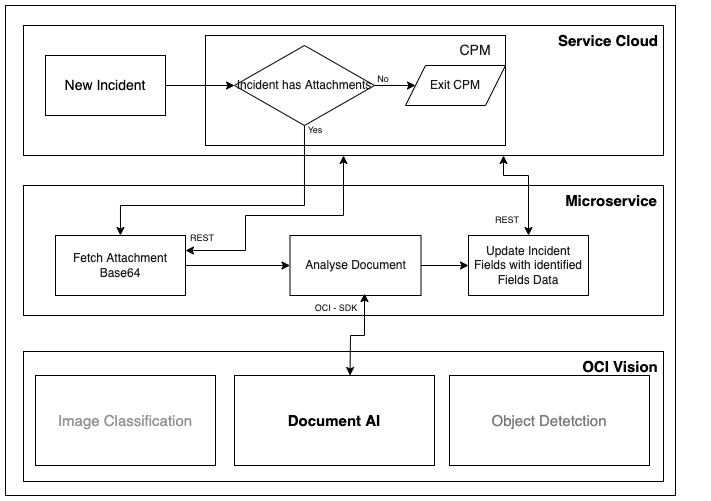

This article describes how to use OCI Vision to reduce the manual effort of an Oracle RightNow Service agent.

Overview

OCI Vision is an AI service from Oracle Cloud Infrastructure for analyzing documents and images, with REST API endpoints and SDKs. The idea behind this article is to use the Document AI capability of OCI Vision to automatically parse and collect information from documents and images attached to an Oracle RightNow Service incident. We will work through the example of a purchase receipt. When a customer raises a service request with a purchase receipt attached (either from the customer portal or by e-mail), the agent no longer needs to read the receipt and fill in the incident manually; the AI service does that for the agent. Let's go through the solution step by step.

Solution Summary

We'll create a TypeScript-based Express microservice (you can choose your own stack) as middleware between the Vision service and the RightNow service. We'll use a CPM (Custom Process) to call this microservice whenever a new incident is created with attachments. CPMs are predefined object event handlers in the RightNow service. We'll use the Oracle RightNow Service REST APIs to update the incident custom fields with the fields identified by the OCI Vision service, and the OCI TypeScript SDK to communicate with the Vision service (the SDK is available for many languages).

Steps

Set up the prerequisites

Create a microservice to communicate between the RightNow service and the OCI Vision service

Create a CPM to call the microservice whenever an incident is created with an attachment

Enable the CPM from Agent Desktop

Prerequisites

An OCI (Oracle Cloud Infrastructure) account with access to the Vision service and REST APIs

A RightNow Service instance, and a user with access to the REST APIs and Workspace Designer

An OCI config file to authenticate with the Vision service

The config file should look like this:

[DEFAULT]

user=<your-user-ocid>

fingerprint=<auth-key-fingerprint>

key_file=<path-to-oci-auth-key>

tenancy=<your-tenancy-ocid>

region=<oci-region>

Or you can use the OCI CLI to create the config file for you:

Install the OCI CLI

Run the oci setup config command

Create custom fields on the incident, using Agent Desktop, to store the data extracted from the receipt. These are the fields we plan to extract with the Vision service:

merchant_name

merchant_address

merchant_phone

total_amount

tax

subtotal

transaction_date

transaction_time

Node.js 14 or above

TypeScript 3.7 or above (the code uses optional chaining)
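Before moving on, it can help to confirm that the OCI config file from the prerequisites contains all five keys. The following is a minimal sketch and not part of the OCI SDK: parseOciProfile and missingOciKeys are hypothetical helper names, the sample values are made up, and the parser only handles the simple key=value layout shown in this article.

```typescript
// Hypothetical helpers (not part of the OCI SDK) for sanity-checking an OCI config file.
const REQUIRED_OCI_KEYS: string[] = ['user', 'fingerprint', 'key_file', 'tenancy', 'region'];

// Parses one [profile] section of an INI-style config into a key/value map.
function parseOciProfile(configText: string, profile: string): { [key: string]: string } {
  const values: { [key: string]: string } = {};
  let inProfile = false;
  for (const rawLine of configText.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (!line || line.charAt(0) === '#') continue; // skip blank lines and comments
    const section = /^\[(.+)\]$/.exec(line);
    if (section) {
      inProfile = section[1] === profile;
      continue;
    }
    const separator = line.indexOf('=');
    if (inProfile && separator > 0) {
      values[line.slice(0, separator).trim()] = line.slice(separator + 1).trim();
    }
  }
  return values;
}

// Returns the required keys that are absent or empty in the given profile.
function missingOciKeys(configText: string, profile: string = 'DEFAULT'): string[] {
  const values = parseOciProfile(configText, profile);
  return REQUIRED_OCI_KEYS.filter((key) => !values[key]);
}

// Sample content with made-up values; key_file is deliberately left out.
const sampleConfig: string = [
  '[DEFAULT]',
  'user=ocid1.user.oc1..example',
  'fingerprint=aa:bb:cc',
  'tenancy=ocid1.tenancy.oc1..example',
  'region=us-ashburn-1'
].join('\n');

console.log(missingOciKeys(sampleConfig));
```

In a real project the text would be read from the path in OCI_CONFIG_PATH (for example with fs.readFileSync) before being passed to missingOciKeys.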

Solution

Once the prerequisites are ready, let's build the microservice first.

Microservice

First, let's create a basic Express application with a single route mounted at /vision

Open your terminal (Command Prompt or Git Bash on Windows)

Execute the following commands

mkdir oci-vision-service

cd oci-vision-service

npm init -y

nano tsconfig.json

Paste the following configs in tsconfig.json

{

"compilerOptions": {

"module": "commonjs",

"esModuleInterop": true,

"target": "es6",

"noImplicitAny": true,

"moduleResolution": "node",

"sourceMap": true,

"outDir": "dist",

"baseUrl": ".",

"paths": {

"*": [

"node_modules/*"

]

}

},

"include": [

"src/**/*"

]

}

npm install --save express body-parser dotenv node-fetch@2

npm install --save-dev @types/express @types/node @types/node-fetch typescript

mkdir src

Create a file app.ts in the src directory and paste the following code

import express from 'express';

import bodyParser from 'body-parser';

import {ociVisionRoute} from './routes/ociVision';

import Logger from './libs/logger';

import 'dotenv/config';

const loggerMiddleware = (request: express.Request, response: express.Response, next: express.NextFunction) => {

Logger.info(`${request.method} ${request.path}`);

next();

};

const app: express.Application = express();

app.use(loggerMiddleware);

app.use(bodyParser.json());

app.use('/vision', ociVisionRoute);

app.listen(process.env.PORT, () => {

Logger.info(`OCI AI connector service is up and running on port: ${process.env.PORT}!`);

});
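The app.ts file imports Logger from ./libs/logger, which is not listed in this article. The following is a minimal sketch of what such a module could look like, assuming only the two methods used here (info and error). The formatLogLine helper and the log format are hypothetical, and any logging library could be used instead.

```typescript
// Hypothetical src/libs/logger.ts: a thin console wrapper exposing info() and error().
type LogLevel = 'INFO' | 'ERROR';

// Builds one log line; Error objects are logged with their stack trace when available.
function formatLogLine(level: LogLevel, message: unknown, now: Date = new Date()): string {
  let text: string;
  if (message instanceof Error) {
    text = message.stack || message.message;
  } else if (typeof message === 'string') {
    text = message;
  } else {
    text = JSON.stringify(message);
  }
  return `${now.toISOString()} [${level}] ${text}`;
}

const Logger = {
  info(message: unknown): void {
    console.log(formatLogLine('INFO', message));
  },
  error(message: unknown): void {
    console.error(formatLogLine('ERROR', message));
  }
};

// In the real file this would end with: export default Logger;
Logger.info('POST /vision/analyse-attatchment');
```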

Once the basic application is ready, let's move on to the Vision service integration. Here we'll use the TypeScript SDK for OCI. To install the SDK, run the following command (the SDK documentation is listed under References):

npm i oci-sdk

Now we'll create a .env file with environment variables for our microservice in the oci-vision-service directory

PORT=8000

OCI_PROFILE=DEFAULT

OCI_CONFIG_PATH=<path-to-the-oci-config-file>

SERVICE_CLOUD_REST_BASE_URL=https://<your-service-cloud-instance-interface-base-url>/services/rest/connect/v1.4/

BASIC_AUTH_TOKEN=<basic-auth-token-for-service-cloud-rest-apis>

Now the SDK is installed and the environment variables needed to access the RightNow Service REST APIs and the Vision service are set.

Let's set up authentication for the Vision service in the microservice.

Create a directory auth in src

Create a file ociAuthProvider.ts in the auth directory

And paste the following code into ociAuthProvider.ts

import * as common from 'oci-common';

import 'dotenv/config';

const configurationFilePath: string = process.env.OCI_CONFIG_PATH;

const profile: string = process.env.OCI_PROFILE;

export const ociAuthProvider

: common.ConfigFileAuthenticationDetailsProvider = new common.ConfigFileAuthenticationDetailsProvider(

configurationFilePath,

profile

);
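The provider above reads OCI_CONFIG_PATH and OCI_PROFILE straight from the environment, so a missing variable only surfaces later as a less obvious error. As a hedged sketch, the service could fail fast at startup instead. The names findMissingEnv, assertEnv and REQUIRED_ENV are hypothetical and not part of dotenv or the OCI SDK; the variable list mirrors the .env file shown earlier.

```typescript
// Variables this article's .env file defines.
const REQUIRED_ENV: string[] = [
  'PORT',
  'OCI_PROFILE',
  'OCI_CONFIG_PATH',
  'SERVICE_CLOUD_REST_BASE_URL',
  'BASIC_AUTH_TOKEN'
];

// Returns the names that are unset or blank in the given environment map.
function findMissingEnv(env: { [key: string]: string | undefined }, required: string[]): string[] {
  return required.filter((name) => {
    const value = env[name];
    return !value || value.trim() === '';
  });
}

// Throws one error that lists every missing variable.
function assertEnv(env: { [key: string]: string | undefined }, required: string[] = REQUIRED_ENV): void {
  const missing = findMissingEnv(env, required);
  if (missing.length) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
}

// Sample environment with made-up values, used only to demonstrate the check.
const sampleEnv: { [key: string]: string | undefined } = { PORT: '8000', OCI_PROFILE: 'DEFAULT' };
console.log(findMissingEnv(sampleEnv, REQUIRED_ENV));
```

In app.ts this could be called as assertEnv(process.env) right after the dotenv/config import, before any client is constructed.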

With authentication ready, let's create a service class for calling the Vision service, with a single function analyseDocument.

Create a directory service in src

Create a file aiVision.ts in the service directory

And add the following code to call the Vision service

import * as vision from 'oci-aivision';

import {ociAuthProvider} from '../auth/ociAuthProvider';

import Logger from '../libs/logger';

export class AiVision {

private visionClient: vision.AIServiceVisionClient;

constructor() {

this.visionClient = new vision.AIServiceVisionClient({authenticationDetailsProvider: ociAuthProvider});

}

public async analyseDocument(documentRequest: vision.requests.AnalyzeDocumentRequest): Promise<vision.responses.AnalyzeDocumentResponse> {

try {

const response: vision.responses.AnalyzeDocumentResponse = await this.visionClient.analyzeDocument(documentRequest);

return response;

} catch (error) {

Logger.error(error);

throw error;

}

}

}

Just as we created a service class for OCI Vision, we need a similar one for calling the Service Cloud REST APIs. It has two functions, getAttatchments and updateIncident. The getAttatchments function returns the attached document's data as a base64 string.

Create a file serviceCloudConnector.ts in the service directory

And paste the following code

import fetch from 'node-fetch';

import {IIncidentUpdateRequest} from '../interfaces/iIncidentUpdateRequest';

import {IAttachmentResponse} from '../interfaces/iAttachmentResponse';

import Logger from '../libs/logger';

import 'dotenv/config';

export class ServiceCloudConnector {

private headers: {[key:string]:string};

private baseUrl:string;

constructor() {

this.headers = {

'OSvC-CREST-Application-Context': 'vision integration poc',

'Authorization': `Basic ${process.env.BASIC_AUTH_TOKEN}`

};

this.baseUrl = process.env.SERVICE_CLOUD_REST_BASE_URL;

}

public async getAttatchments(incidentId: string, attatchmentId: string):Promise<IAttachmentResponse> {

try {

const options = {

method: 'GET',

headers: this.headers

};

const response = await fetch(`${this.baseUrl}incidents/${incidentId}/fileAttachments/${attatchmentId}/data`, options);

const data: IAttachmentResponse = await response.json();

return data;

} catch (error) {

// Rethrow the original error: JSON.stringify(error) would reduce an Error to '{}'

throw error;

}

}

public async updateIncident(incidentId: string, request: IIncidentUpdateRequest):Promise<string> {

try {

const options = {

method: 'PATCH',

headers: this.headers,

body: JSON.stringify(request)

};

const response = await fetch(`${this.baseUrl}incidents/${incidentId}`, options);

// The update response is read as plain text, so the return type is string

const data: string = await response.text();

Logger.info(data);

return data;

} catch (error) {

// Log and rethrow the original error: JSON.stringify(error) would reduce an Error to '{}'

Logger.error(error);

throw error;

}

}

}
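The connector above parses the response body without looking at the HTTP status, so a 401 or 404 from Service Cloud would be passed along as if it were attachment data. One possible guard is sketched below. assertOk and HttpResponseLike are hypothetical names; the ok, status and statusText fields are the standard ones on a fetch Response.

```typescript
// Minimal structural type covering the fetch Response fields used below.
interface HttpResponseLike {
  ok: boolean;
  status: number;
  statusText: string;
}

// Hypothetical helper: throws a descriptive error for non-2xx responses.
function assertOk(response: HttpResponseLike, action: string): void {
  if (!response.ok) {
    throw new Error(`${action} failed: HTTP ${response.status} ${response.statusText}`);
  }
}

// Demonstration with a hand-built response object.
try {
  assertOk({ ok: false, status: 401, statusText: 'Unauthorized' }, 'Fetching attachment');
} catch (error) {
  console.log((error as Error).message);
}
```

In the connector, a call such as assertOk(response, 'Fetching attachment') would go immediately before response.json() or response.text().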

You might have noticed that app.ts imports ociVisionRoute from routes/ociVision. Let's build that next.

Create a directory called routes in src

Create a file called ociVision.ts in that directory with the following code

import express from 'express';

import * as vision from 'oci-aivision';

import {ServiceCloudConnector} from '../service/serviceCloudConnector';

import {AiVision} from '../service/aiVision';

import {IAttachmentResponse} from '../interfaces/iAttachmentResponse';

import {DocumentSource} from '../constants/documentSource';

import {FeatureType} from '../constants/featureType';

import {APIResponse} from '../utils/apiResponse';

import {ServiceCloudHelper} from '../helper/serviceCloudHelper';

import {IIncidentUpdateRequest} from '../interfaces/iIncidentUpdateRequest';

import Logger from '../libs/logger';

import {VisionRouteHelper} from '../helper/visionRouteHelper';

// eslint-disable-next-line new-cap

const ociVisionRoute: express.Router = express.Router();

const aiVision: AiVision = new AiVision();

const serviceCloudConnector: ServiceCloudConnector = new ServiceCloudConnector();

ociVisionRoute.post('/analyse-attatchment', async (request: express.Request, response: express.Response) => {

try {

const incidentId: string = request.body?.incidentId;

const attachmentId: string = request.body?.attachmentId;

if (incidentId && attachmentId) {

Logger.info(`Vision Service: Fetching attachment base64 with ID => ${attachmentId}`);

const attachmentResponse: IAttachmentResponse = await serviceCloudConnector.getAttatchments(incidentId, attachmentId);

const visionRequest: vision.requests.AnalyzeDocumentRequest = {

analyzeDocumentDetails: {

document: {

data: attachmentResponse.data,

source: DocumentSource.INLINE

},

features: [{

featureType: FeatureType.KEY_VALUE_DETECTION

}]

}

};

Logger.info('Vision Service: Analysing doc');

const analysedData: vision.responses.AnalyzeDocumentResponse = await aiVision.analyseDocument(visionRequest);

const incidentRequest: IIncidentUpdateRequest = ServiceCloudHelper.buildUpdateIncidentCustomFieldsRequests(analysedData);

if (incidentRequest) {

Logger.info(`Vision Service: Updating incident : ID => ${incidentId}`);

await serviceCloudConnector.updateIncident(incidentId, incidentRequest);

}

response.send(APIResponse.wrapSuccess(analysedData));

} else {

VisionRouteHelper.handleMissingFields(incidentId, attachmentId, response);

}

} catch (error) {

Logger.error(error);

response.status(500).send(APIResponse.wrapError(error));

}

});

export {ociVisionRoute};

The route uses two helper classes that parse and process the responses from Service Cloud and the Vision service.

Let's create a directory named helper in src to keep these helper classes. The first one is serviceCloudHelper.ts:

import * as vision from 'oci-aivision';

import {IExtentedFieldValue} from '../interfaces/iExtentedFieldValue';

import {DocumentKeyMap} from '../constants/documentKeyMap';

import {IIncidentUpdateRequest, CutomFields} from '../interfaces/iIncidentUpdateRequest';

export class ServiceCloudHelper {

public static buildUpdateIncidentCustomFieldsRequests(analysedData: vision.responses.AnalyzeDocumentResponse): IIncidentUpdateRequest | null {

// eslint-disable-next-line id-length

const request: IIncidentUpdateRequest = {customFields: {c: {}}};

analysedData?.analyzeDocumentResult?.pages?.forEach((page) => {

if (page.documentFields && page.documentFields.length) {

page.documentFields.forEach((field: vision.models.DocumentField) => {

if (field.fieldLabel?.name) {

const dataKey: keyof CutomFields = DocumentKeyMap.keysRequestParams[field.fieldLabel.name];

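if (dataKey && dataKey === 'transaction_date') {

const date: Date = new Date((field.fieldValue as IExtentedFieldValue).value?.trim());

// toISOString() throws a RangeError on an invalid Date, so skip values that fail to parse

if (!isNaN(date.getTime())) {

request.customFields.c[dataKey] = new Date(date.getTime() - (date.getTimezoneOffset() * 60000)).toISOString();

}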

} else if (dataKey) {

request.customFields.c[dataKey] = (field.fieldValue as IExtentedFieldValue).value;

}

}

});

}

});

if (Object.keys(request.customFields.c).length) {

return request;

} else {

return null;

}

}

}
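The interfaces imported by the connector, the route and this helper (IAttachmentResponse, IIncidentUpdateRequest, CutomFields and IExtentedFieldValue) are not listed in this article. The shapes below are an assumption inferred from how the code uses them, not the author's actual files. In particular, treating every custom field as an optional string is a guess that depends on how the fields were defined in Agent Desktop.

```typescript
// Assumed shape: the route reads only the base64 payload via attachmentResponse.data.
interface IAttachmentResponse {
  data: string;
}

// Assumed shape: one optional entry per custom field listed in the prerequisites.
// The identifier keeps the article's spelling (CutomFields) so the imports still match.
interface CutomFields {
  merchant_name?: string;
  merchant_address?: string;
  merchant_phone?: string;
  total_amount?: string;
  tax?: string;
  subtotal?: string;
  transaction_date?: string; // ISO 8601 string produced by toISOString()
  transaction_time?: string;
}

// Matches the object literal built in ServiceCloudHelper: {customFields: {c: {}}}.
interface IIncidentUpdateRequest {
  customFields: { c: CutomFields };
}

// Assumed shape: the helper reads only .value; in the project this would
// probably extend the Vision SDK's field value model.
interface IExtentedFieldValue {
  value?: string;
}

// Sample request body with made-up values.
const sampleUpdate: IIncidentUpdateRequest = {
  customFields: { c: { merchant_name: 'Example Store', total_amount: '42.50' } }
};
console.log(JSON.stringify(sampleUpdate));
```

In the project each interface would be exported from its own file under src/interfaces, matching the import paths used above.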

The second helper is visionRouteHelper.ts:

import express from 'express';

import {APIResponse} from '../utils/apiResponse';

export class VisionRouteHelper {

public static handleMissingFields(incidentId: string, attachmentId: string, response: express.Response): void {

const missingFields: string[] = [];

if (!incidentId) missingFields.push('incidentId');

if (!attachmentId) missingFields.push('attachmentId');

response.status(400).send(APIResponse.wrapError({missingFields: missingFields}, 'Incomplete Request!'));

}

}
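Both the route and this helper call APIResponse.wrapSuccess and APIResponse.wrapError from ../utils/apiResponse, which is not listed in this article. The following is a sketch of one possible implementation. The envelope fields (success, message, data, error) and the default messages are assumptions; only the two method names and their argument order come from the calls above.

```typescript
// Assumed response envelope; the field names are illustrative.
interface IApiResponse<T> {
  success: boolean;
  message: string;
  data?: T;
  error?: unknown;
}

class APIResponse {
  // Wraps a successful payload.
  public static wrapSuccess<T>(data: T, message: string = 'OK'): IApiResponse<T> {
    return { success: true, message: message, data: data };
  }

  // Wraps an error; the optional second argument matches the call in handleMissingFields.
  public static wrapError(error: unknown, message: string = 'Something went wrong'): IApiResponse<never> {
    // Error instances serialize to {} with JSON.stringify, so copy name and message explicitly.
    const detail: unknown = error instanceof Error ? { name: error.name, message: error.message } : error;
    return { success: false, message: message, error: detail };
  }
}

console.log(JSON.stringify(APIResponse.wrapError({ missingFields: ['incidentId'] }, 'Incomplete Request!')));
```

In the project the class would be exported from src/utils/apiResponse.ts.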

The code above uses a few constants and enums. These are kept in a directory called constants in src, in three files: documentKeyMap.ts, documentSource.ts and featureType.ts, shown below in that order.

import {CutomFields} from '../interfaces/iIncidentUpdateRequest';

export class DocumentKeyMap {

public static keysRequestParams: { [key: string]: keyof CutomFields } = {

'MerchantName': 'merchant_name',

'MerchantAddress': 'merchant_address',

'MerchantPhoneNumber': 'merchant_phone',

'Total': 'total_amount',

'Tax': 'tax',

'Subtotal': 'subtotal',

'TransactionDate': 'transaction_date',

'TransactionTime': 'transaction_time'

};

}

export enum DocumentSource {

OBJECT_STORAGE = 'OBJECT_STORAGE',

INLINE = 'INLINE'

}

export enum FeatureType {

KEY_VALUE_DETECTION = 'KEY_VALUE_DETECTION',

TEXT_DETECTION = 'TEXT_DETECTION',

DOCUMENT_CLASSIFICATION = 'DOCUMENT_CLASSIFICATION',

LANGUAGE_CLASSIFICATION = 'LANGUAGE_CLASSIFICATION',

TABLE_DETECTION = 'TABLE_DETECTION'

}
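To make the mapping concrete, here is a small worked example of how detected receipt fields turn into custom field values, including the time zone adjustment applied to transaction_date. It is a simplified, self-contained sketch: SampleField stands in for the Vision SDK's field model, the key map is a shortened copy of DocumentKeyMap, and the sample values are made up.

```typescript
// Simplified stand-in for a detected field: label name plus extracted text.
interface SampleField {
  name: string;
  value: string;
}

// Shortened copy of the article's DocumentKeyMap.
const sampleKeyMap: { [key: string]: string } = {
  MerchantName: 'merchant_name',
  Total: 'total_amount',
  TransactionDate: 'transaction_date'
};

// Shifts the timestamp by the local UTC offset so that the ISO string shows the
// local wall-clock time (the same arithmetic ServiceCloudHelper uses).
function toLocalIsoString(date: Date): string {
  return new Date(date.getTime() - date.getTimezoneOffset() * 60000).toISOString();
}

// Maps detected fields to custom field values; unmapped labels are ignored.
function buildCustomFields(fields: SampleField[]): { [key: string]: string } {
  const result: { [key: string]: string } = {};
  for (const field of fields) {
    const target = sampleKeyMap[field.name];
    if (!target) continue;
    if (target === 'transaction_date') {
      const parsed = new Date(field.value.trim());
      // Skip dates that fail to parse instead of letting toISOString() throw.
      if (!isNaN(parsed.getTime())) result[target] = toLocalIsoString(parsed);
    } else {
      result[target] = field.value;
    }
  }
  return result;
}

const sampleFields: SampleField[] = [
  { name: 'MerchantName', value: 'Example Store' },
  { name: 'Total', value: '42.50' },
  { name: 'Cashier', value: 'A. Person' } // no mapping, so it is dropped
];
console.log(JSON.stringify({ customFields: { c: buildCustomFields(sampleFields) } }));
```

The printed object has the same shape as the body that updateIncident sends in its PATCH request.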

Now that the microservice is ready, compile and start the application with the following commands

npx tsc

node dist/app.js

Deploy the service (or, for testing, expose it with any port-tunneling tool).

CPM to call the Microservice

Now we have to create a Custom Process (CPM) that calls the microservice whenever a new incident is created with an attachment. A CPM is essentially a PHP script.

Replace <your-microservice-domain-name> in the following snippet with the domain name of your microservice deployment.

Do not remove the comments in the custom script; they are very important.

Attachment: cpm.txt (3.16 KB)

try{

$response = curlRequest('http://<your-microservice-domain-name>/vision/analyse-attatchment','POST',$header,$request);

print_r($response);

}

catch(Exception $e){

echo $e->getMessage();

}

Demo

In the demo video, a receipt is uploaded along with a support request. On the Agent Desktop side, the fields from the receipt are automatically identified and populated in the incident custom fields.

References

OCI SDK

OCI Vision

CPMs

Express.js

Typescript