Hi All -

We are seeing very poor performance after enabling database links for our ETL application (Oracle ODI). Some tasks have shown improvement, others do not seem to be affected, but one in particular, is taking substantially longer.

Here's a look at the performance of the days before and after the db link changes (time is in minutes here -> duration | start time | end time):

Before

After

When I drill into these steps, I can see the specific tasks which are taking up the time.

In the no-db-link scenario, here is the code

(on source - an EBS database 11.2.0.4)

select

TO_CHAR(SQ_FND_FLEX_VALUE_HIER_PS.FLEX_VALUE_SET_ID) || '~' || SQ_FND_FLEX_VALUE_HIER_PS.FLEX_VALUE || '~' ||

COALESCE(SQ_FND_FLEX_VALUE_HIER_PS.PARENT_FLEX_VALUE, '') C1_INTEGRATION_ID,

TO_DATE('01/01/1899 00:00:00', 'MM/DD/YYYY HH24:MI:SS') C2_SRC_EFF_FROM_DT

from

( /* Subselect from SDE_ORA_Stage_GLSegmentDimension_Hierarchy_Primary.W_GL_SEGMENT_HIER_PS_PE_SQ_FND_FLEX_VALUE_NORM_HIER

*/

select

/*+ USE_NL(B H) */

B.FLEX\_VALUE\_SET\_ID FLEX\_VALUE\_SET\_ID,

H.PARENT_FLEX_VALUE PARENT_FLEX_VALUE,

B.FLEX_VALUE FLEX_VALUE,

MAX(H.CREATION_DATE) CREATION_DATE,

MAX(H.LAST_UPDATE_DATE) LAST_UPDATE_DATE,

MAX(H.CREATED_BY) CREATED_BY,

MAX(H.LAST_UPDATED_BY) LAST_UPDATED_BY

from APPS.FND_FLEX_VALUES B, APPS.FND_FLEX_VALUE_NORM_HIERARCHY H

where (1=1)

And (H.FLEX_VALUE_SET_ID=B.FLEX_VALUE_SET_ID AND B.FLEX_VALUE BETWEEN H.CHILD_FLEX_VALUE_LOW AND H.CHILD_FLEX_VALUE_HIGH

AND ( (B.SUMMARY_FLAG = 'Y'

AND H.RANGE_ATTRIBUTE = 'P')

OR (B.SUMMARY_FLAG = 'N'

AND H.RANGE_ATTRIBUTE = 'C')))

And (B.FLEX_VALUE IS NOT NULL)

Group By B.FLEX_VALUE_SET_ID,

H.PARENT_FLEX_VALUE,

B.FLEX_VALUE

UNION ALL

select

/*+NO_QUERY_TRANSFORMATION */

FND\_FLEX\_VALUE\_NORM\_HIERARCHY.FLEX\_VALUE\_SET\_ID FLEX\_VALUE\_SET\_ID,

null PARENT_FLEX_VALUE,

FND_FLEX_VALUE_NORM_HIERARCHY.PARENT_FLEX_VALUE FLEX_VALUE,

MAX(FND_FLEX_VALUE_NORM_HIERARCHY.CREATION_DATE) CREATION_DATE,

MAX(FND_FLEX_VALUE_NORM_HIERARCHY.LAST_UPDATE_DATE) LAST_UPDATE_DATE,

MAX(FND_FLEX_VALUE_NORM_HIERARCHY.CREATED_BY) CREATED_BY,

MAX(FND_FLEX_VALUE_NORM_HIERARCHY.LAST_UPDATED_BY) LAST_UPDATED_BY

from APPS.FND_FLEX_VALUE_NORM_HIERARCHY FND_FLEX_VALUE_NORM_HIERARCHY

where (1=1)

And (1=1 AND (NOT EXISTS(

(SELECT /*+ NL_AJ */ FLEX_VALUE FROM

APPS.FND_FLEX_VALUE_CHILDREN_V

B WHERE FND_FLEX_VALUE_NORM_HIERARCHY.FLEX_VALUE_SET_ID = B.FLEX_VALUE_SET_ID AND FND_FLEX_VALUE_NORM_HIERARCHY.PARENT_FLEX_VALUE = B.FLEX_VALUE

))))

And (FND_FLEX_VALUE_NORM_HIERARCHY.PARENT_FLEX_VALUE IS NOT NULL)

Group By FND_FLEX_VALUE_NORM_HIERARCHY.FLEX_VALUE_SET_ID,

FND_FLEX_VALUE_NORM_HIERARCHY.PARENT_FLEX_VALUE

) SQ_FND_FLEX_VALUE_HIER_PS

where (1=1)

(on target - an Oracle data warehouese 12.1.0.2)

insert #biapps.ETL_HINT_INSERT_VALUES C$_702522750_4_0

(

C1_INTEGRATION_ID,

C2_SRC_EFF_FROM_DT

)

values

(

:C1_INTEGRATION_ID,

:C2_SRC_EFF_FROM_DT

)

The above sequence takes just over 17 minutes. Then, in a subsequent step, it does one more insert that takes around 2 seconds ... also on the target data warehouse:

insert into SYFBI_DW.W_GL_SEGMENT_HIER_PS_PE

(

DATASOURCE_NUM_ID,

INTEGRATION_ID,

SRC_EFF_FROM_DT

)

select

DATASOURCE_NUM_ID,

INTEGRATION_ID,

SRC_EFF_FROM_DT

FROM (

select

#biapps.DATASOURCE_NUM_ID DATASOURCE_NUM_ID,

C1_INTEGRATION_ID INTEGRATION_ID,

C2_SRC_EFF_FROM_DT SRC_EFF_FROM_DT

from C$_702522750_4_0

where (1=1)

In the db-link-enabled****scenario, here is the code

(on source - an EBS database 11.2.0.4)

/* Db link option chosen and SDS not deployed */

create or replace view APPS.C$_704341750_4_0

(

C1_INTEGRATION_ID,

C2_SRC_EFF_FROM_DT

)

as select

TO_CHAR(SQ_FND_FLEX_VALUE_HIER_PS.FLEX_VALUE_SET_ID) || '~' || SQ_FND_FLEX_VALUE_HIER_PS.FLEX_VALUE || '~' ||

COALESCE(SQ_FND_FLEX_VALUE_HIER_PS.PARENT_FLEX_VALUE, '') C1_INTEGRATION_ID,

TO_DATE('01/01/1899 00:00:00', 'MM/DD/YYYY HH24:MI:SS') C2_SRC_EFF_FROM_DT

from

( /* Subselect from SDE_ORA_Stage_GLSegmentDimension_Hierarchy_Primary.W_GL_SEGMENT_HIER_PS_PE_SQ_FND_FLEX_VALUE_NORM_HIER

*/

select

/*+ USE_NL(B H) */

B.FLEX\_VALUE\_SET\_ID FLEX\_VALUE\_SET\_ID,

H.PARENT_FLEX_VALUE PARENT_FLEX_VALUE,

B.FLEX_VALUE FLEX_VALUE,

MAX(H.CREATION_DATE) CREATION_DATE,

MAX(H.LAST_UPDATE_DATE) LAST_UPDATE_DATE,

MAX(H.CREATED_BY) CREATED_BY,

MAX(H.LAST_UPDATED_BY) LAST_UPDATED_BY

from APPS.FND_FLEX_VALUES B, APPS.FND_FLEX_VALUE_NORM_HIERARCHY H

where (1=1)

And (H.FLEX_VALUE_SET_ID=B.FLEX_VALUE_SET_ID AND B.FLEX_VALUE BETWEEN H.CHILD_FLEX_VALUE_LOW AND H.CHILD_FLEX_VALUE_HIGH

AND ( (B.SUMMARY_FLAG = 'Y'

AND H.RANGE_ATTRIBUTE = 'P')

OR (B.SUMMARY_FLAG = 'N'

AND H.RANGE_ATTRIBUTE = 'C')))

And (B.FLEX_VALUE IS NOT NULL)

Group By B.FLEX_VALUE_SET_ID,

H.PARENT_FLEX_VALUE,

B.FLEX_VALUE

UNION ALL

select

/*+NO_QUERY_TRANSFORMATION */

FND\_FLEX\_VALUE\_NORM\_HIERARCHY.FLEX\_VALUE\_SET\_ID FLEX\_VALUE\_SET\_ID,

null PARENT_FLEX_VALUE,

FND_FLEX_VALUE_NORM_HIERARCHY.PARENT_FLEX_VALUE FLEX_VALUE,

MAX(FND_FLEX_VALUE_NORM_HIERARCHY.CREATION_DATE) CREATION_DATE,

MAX(FND_FLEX_VALUE_NORM_HIERARCHY.LAST_UPDATE_DATE) LAST_UPDATE_DATE,

MAX(FND_FLEX_VALUE_NORM_HIERARCHY.CREATED_BY) CREATED_BY,

MAX(FND_FLEX_VALUE_NORM_HIERARCHY.LAST_UPDATED_BY) LAST_UPDATED_BY

from APPS.FND_FLEX_VALUE_NORM_HIERARCHY FND_FLEX_VALUE_NORM_HIERARCHY

where (1=1)

And (1=1 AND (NOT EXISTS(

(SELECT /*+ NL_AJ */ FLEX_VALUE FROM

APPS.FND_FLEX_VALUE_CHILDREN_V

B WHERE FND_FLEX_VALUE_NORM_HIERARCHY.FLEX_VALUE_SET_ID = B.FLEX_VALUE_SET_ID AND FND_FLEX_VALUE_NORM_HIERARCHY.PARENT_FLEX_VALUE = B.FLEX_VALUE

))))

And (FND_FLEX_VALUE_NORM_HIERARCHY.PARENT_FLEX_VALUE IS NOT NULL)

Group By FND_FLEX_VALUE_NORM_HIERARCHY.FLEX_VALUE_SET_ID,

FND_FLEX_VALUE_NORM_HIERARCHY.PARENT_FLEX_VALUE

) SQ_FND_FLEX_VALUE_HIER_PS

where (1=1)

(on target - an Oracle data warehouese 12.1.0.2)

insert into SYFBI_DW.W_GL_SEGMENT_HIER_PS_PE

(

DATASOURCE_NUM_ID,

INTEGRATION_ID,

SRC_EFF_FROM_DT

)

select

DATASOURCE_NUM_ID,

INTEGRATION_ID,

SRC_EFF_FROM_DT

FROM (

select

#biapps.DATASOURCE_NUM_ID DATASOURCE_NUM_ID,

C1_INTEGRATION_ID INTEGRATION_ID,

C2_SRC_EFF_FROM_DT SRC_EFF_FROM_DT

from SYFBI_DW.C$_704341750_4_0

where (1=1)

The above sequence takes almost 2 1/2 hours. There are no subsequent insert steps.

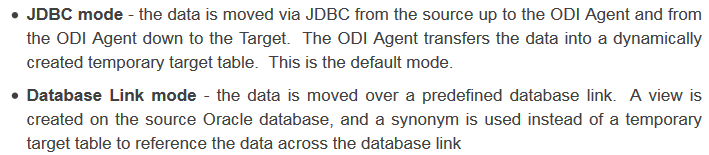

Here is a high-level summary (taken from: https://blogs.oracle.com/biapps/3-modes-for-moving-data-to-the-bi-applications-dw-from-a-source-application-database) between the two modes:

One thing I don't understand is why there is only such bad performance on this particular task. Others do not seem to be impacted in the same way. I would've thought that if it was the db link itself, we would see others with bad performance as well.

Thanks in advance for any thoughts on this. I'm happy to work with, and provide additional information (e.g. explain plans, traces, etc.) ...

Regards,

Charles