Hello,

I have a source table (partitioned and indexed) with approximately 156 million rows. I am trying to create a target table using CTAS, reading from the source table with a simple SELECT that applies LEAD/LAG operations to a date column, and it is taking over 8 hours.

While searching online, I found a developer recommending the MATCH_RECOGNIZE technique, which is claimed to run faster than analytic SQL functions on very large tables. I have not tested this yet because I need help converting the query below into a MATCH_RECOGNIZE statement that returns the same result set.

    CREATE TABLE TB_TARGET
    PARALLEL 8
    NOLOGGING
    AS
    SELECT /*+ PARALLEL_INDEX(8) PARALLEL(8) */
           PAT_ID,
           VISIT_DATE_DT,
           ROW_NUMBER() OVER (PARTITION BY PAT_ID ORDER BY VISIT_DATE_DT) AS ROW_NUM,
           ED_UCC_DT,
           LAG(ED_UCC_DT IGNORE NULLS) OVER (PARTITION BY PAT_ID ORDER BY VISIT_DATE_DT) AS PREV_ED_UCC_DT,
           LEAD(ED_UCC_DT IGNORE NULLS) OVER (PARTITION BY PAT_ID ORDER BY VISIT_DATE_DT) AS NEXT_ED_UCC_DT
    FROM TB_SOURCE; -- Very large table!

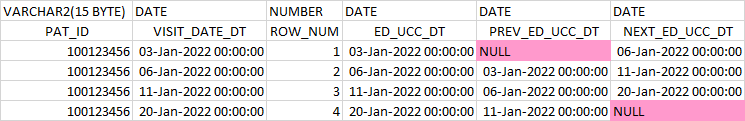

The result set for a single PAT_ID record (100123456) looks like this:-

Questions:

1. How can I speed this query up when it runs over 156 million rows? The real query applies multiple LEAD and LAG operations to other columns in addition to the column shown above.

2. If MATCH_RECOGNIZE is the fastest solution, could you please help me write a query that yields the same results as shown above (including the NULLs)?

3. Any other solution is welcome, as long as it brings the execution time down from 8 hours to an acceptable duration.
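
To make "the same results" concrete, here is a minimal Python sketch of the semantics any rewrite would need to preserve. It is only a reference for the logic, not Oracle code and not a performance fix. The function name `lag_lead_ignore_nulls` and the sample dates are made up for illustration (they are not taken from TB_SOURCE), and it assumes VISIT_DATE_DT is never NULL and is unique within a PAT_ID.

```python
from datetime import date
from itertools import groupby
from operator import itemgetter


def lag_lead_ignore_nulls(rows):
    """rows: (pat_id, visit_date_dt, ed_ucc_dt) tuples; ed_ucc_dt may be None.

    Returns (pat_id, visit_date_dt, row_num, ed_ucc_dt,
             prev_ed_ucc_dt, next_ed_ucc_dt) tuples, mirroring the SELECT list.
    """
    out = []
    # PARTITION BY PAT_ID ORDER BY VISIT_DATE_DT
    ordered = sorted(rows, key=itemgetter(0, 1))
    for _, group in groupby(ordered, key=itemgetter(0)):
        group = list(group)

        # LAG(... IGNORE NULLS): latest non-NULL value strictly before the row
        prev_vals, last_seen = [], None
        for _, _, ed in group:
            prev_vals.append(last_seen)
            if ed is not None:
                last_seen = ed

        # LEAD(... IGNORE NULLS): earliest non-NULL value strictly after the row
        next_vals, next_seen = [None] * len(group), None
        for i in range(len(group) - 1, -1, -1):
            next_vals[i] = next_seen
            if group[i][2] is not None:
                next_seen = group[i][2]

        for i, (pat_id, visit, ed) in enumerate(group):
            out.append((pat_id, visit, i + 1, ed, prev_vals[i], next_vals[i]))
    return out


# Hypothetical rows for one patient, deliberately out of order.
SAMPLE_ROWS = [
    (100123456, date(2020, 2, 10), None),
    (100123456, date(2020, 1, 1), None),
    (100123456, date(2020, 3, 15), date(2020, 3, 15)),
    (100123456, date(2020, 1, 5), date(2020, 1, 5)),
]

for row in lag_lead_ignore_nulls(SAMPLE_ROWS):
    print(row)
```

The point of the sketch is that each row needs the nearest non-NULL ED_UCC_DT on either side within its PAT_ID, with NULL where none exists; that is the behaviour a MATCH_RECOGNIZE version (or any other rewrite) would have to reproduce.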

Thank you in advance.