Categories

- All Categories

- Oracle Analytics Learning Hub

- 31 Oracle Analytics Sharing Center

- 22 Oracle Analytics Lounge

- 252 Oracle Analytics News

- 45 Oracle Analytics Videos

- 16.1K Oracle Analytics Forums

- 6.3K Oracle Analytics Idea Labs

- Oracle Analytics User Groups

- 93 Oracle Analytics Trainings

- 16 Oracle Analytics and AI Challenge

- Find Partners

- For Partners

Where's the SQL Translation of OAS Functions?

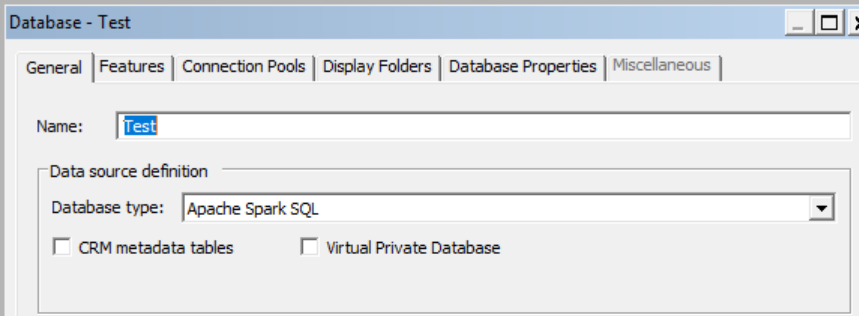

We are using OAS Analytics Server with Databricks Warehouse. I've created a physical server of type "Apache Spark SQL" (which is the closest Technology available) that connects via ODBC (Simba Spark Driver) - this basically works.

However, I noticed that some of the built-in date/time functions generate incorrect SQL syntax. Eg: WEEK_OF_YEAR (used as a formula in a report)

The effective query that's sent to the datasource is like

cast(date_format(T237283.open_date,'w') as int) as c7

it should be

weekofyear(T237283.open_date) as c7_test

(never mind the cast)

Is there any chance to change the SQL translation from date_format(…) to weekofyear ?

OAS 6.4, Linux RedHat

Best Answer

-

Hi,

Welcome to the Oracle Analytics community and forum.

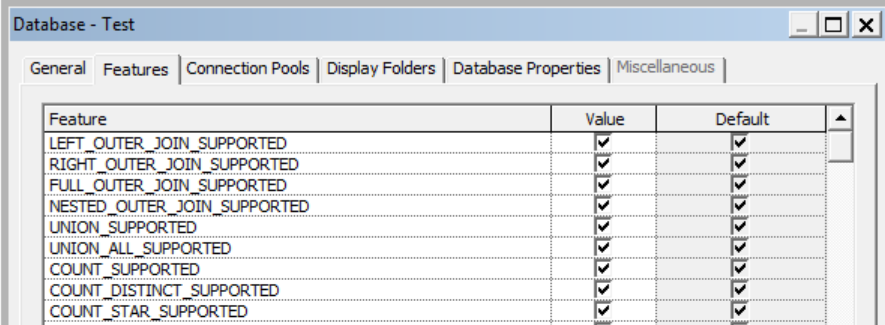

The best you can do is to change some settings in the "features" tab of the physical database object in the semantic model, that can impact how the system generate some queries (and there are some features related to extraction of the week of year from a date, worth a test…).

You can't see a list of mappings of LSQL functions to physical queries, you can't change them.

If you believe there is a bug and that for any kind of source of type "Apache Spark SQL" the LSQL function WEEK_OF_YEAR should be generated as

weekofyear, you can raise that as a bug I would say. But that's the tricky part: it should be true all the time, and not just if/when you use that physical database object to connect to your Databricks, because in that case it isn't a bug anymore…As alternative, you can try to look into using EVALUATE instead of the native LSQL function, and then use your own physical query code directly.

2

Answers

-

Thanks Gianni

Well, strange thing the "features" tab of the physical database's properties is empty and I can't add anything there. The "functions" tab only lets me enable/disable certain features and some formats. Nothing to manipulate there.

Looks like certain built-in functions can't be used with Spark/Databricks. Maybe we'll have to provide pre-defined attributes for use in reports….

Kind Regards,

Roger

0 -

Hi Roger,

Are you saying that this screen

is empty in your RPD?

There isn't a single feature listed for your database of type "Apache Spark SQL"?

If that's the case, it doesn't sound right at all…

I don't have a 6.4 model admin tool around, but I'm quite sure it wasn't a new thing in that version, therefore the features should exist.

Can you try maybe creating a brand new, empty, RPD and create a new physical database of that type in there to see if the features stays empty.

The feature existing in there is, for example, CALENDAR_EXTRACT_WEEK_OF_YEAR_SUPPORTED which should be enabled by default. If you don't have any feature … no clue what kind of default your OAS is applying. Maybe the syntax you don't like (cast of date_format) is because the system believes it doesn't support CALENDAR_EXTRACT_WEEK_OF_YEAR_SUPPORTED.

And you are right: not everything always work, sometime the translation from LSQL to physical query isn't exactly what you need. In those case you can, as you say, create attributes when you need them by using workarounds.

0 -

Nah, the "features" tab is filled, just like in your example. The "database properties" tab is empty. As for now, I'll go with the EVALUATE solution, this does the trick.

0 -

Hi Roger @User_2SRRN ,

I echo Gianni's welcome message.

Currently, Databricks is only supported for datasets via a JDBC driver for OAC. It is not supported for ODBC, Semantic Modeler, RPD

https://docs.oracle.com/en/cloud/paas/analytics-cloud/acsds/databricks.html#GUID-8AE7E9F6-5A3C-485A-B20D-86DBC6808233

For OAS 2025 (and earlier), it is not listed as certified

https://docs.oracle.com/en/middleware/bi/analytics-server/administer-oas/certification-supported-data-sources.html#GUID-ABD97D54-6157-4287-BEF8-1B6B7D9EC6C2

However, there is the concept of generic JDBC driver support.

Having said that, as a generic data source, you likely could workaround that using JDBC, instead of ODBC

Oracle does not provide the needed jar file and must be procured through the Azure vendor.- Obtain JDBC driver from vendor

- Install the JAR (link to doc)

- Create JDBC (JNDI) (link to doc)

- Load Data Sources (link to doc)

Next, configure the RPD Connection Pool.

Select "Apache Spark SQL" as the database type and not any other option of Azure or there will be errors at run-time.

Your results may vary since it isn't documented as certified, or steps, but it likely should work since direct dataset connection is using JDBC.

As mentioned, Evaluate function is likely the best method to use to function ship the native sql to the source.

As a side note, OAS 2022 (i.e. 6.4) is long out of error correction support, so no defect can be filed against that update. I would encourage you to update to OAS 2025 in case you do encounter a defect (bug) that needs to be addressed.

Hope that helps.0 -

Hi Steve

It's on-prem "OAS" (not OAC) running on a RedHat Server. We're aware it's outdated and plan to upgrade. Databricks via ODBC (Simba Spark Driver) and Technology Type Spark seems to work for most functions.

0