Categories

- All Categories

- Oracle Analytics and AI Learning Hub

- 43 Oracle Analytics and AI Sharing Center

- 19 Oracle Analytics and AI Lounge

- 282 Oracle Analytics and AI News

- 58 Oracle Analytics and AI Videos

- 16.3K Oracle Analytics and AI Forums

- 6.4K Oracle Analytics and AI Labs

- Oracle Analytics and AI User Groups

- 107 Oracle Analytics and AI Trainings

- 20 Oracle Analytics and AI Challenge

- Find Partners

- For Partners

Evaluating the difference between 2 datasets question (Method)

OAS 2023

Hi, I am trying to evaluate the difference between 2 datasets - It is essentially a hierarchy that may or may not be updates throughout the day, and faults often occurs because of someone putting something in the hierarchy that is not properly placed or similar. When stuff breaks down, it would be very helpful to know ahead of time that a change was indeed made. The hierarchy is just a flat table with rows and columns, not a hierarchy with levels as in OAS.

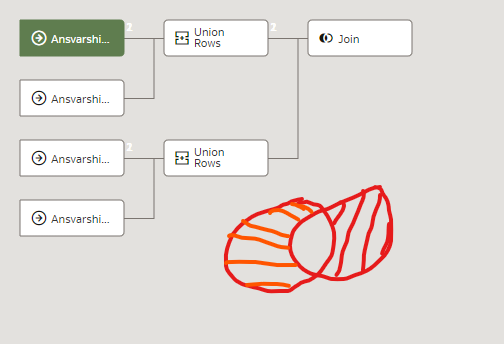

What I have done is this:

Made a subject area A from the subject area the hierarchy exists in. Made a data flow that loads all data from A to data set A1 that is scheduled to run every other day + data flow that loads all data from A to data set A2 that loads every other other day. The result is 2 data sets that contains the hierarchy from day 1 and day 2.

Now I want evaluate these if they are different, and want to make an analysis that will notify me if there are any rows at all in the analysis. I am struggling a bit to understand how to make this join - As I cannot see how to essentially create left + right anti-join so that I get all the rows that are different from each other.

I am able to make a left outer join (2 upper at the first stage of the data flow) and a right outer join (2 lower), but when I try to join these again the format is not very helpful.

The format ends like this:

Columns: Col1| Col2| Col3| Col1| Col2| Col3

Row 1: a|b|c|null|null|null

row 2: null|null|null|a|b|c

In my head there should be 3 columns with 2 rows, not 6 columns.

I am guessing that I am doing something wrong, or should be using another method altogether - I am making quite a few parts in this process to make it work the way I want, but currently I am not able to end up with a table with 3 columns that states: These are the differences between these 2 data sets.

Suggestions? (I added a picture of my current "model" that I want to implement)

Best Answer

-

Hi Kristian,

First I must say that I don't use the dataflow joins because "they don't speak sql"... (Keep rows, Input 1 "All rows" or "Matching rows", Input 2 "All rows" or "Matching rows" : call that thing what it is, inner join, left outer, right outer or full outer join). I generally do this job in the database...

I imagine that if you select "dataset day 1", "dataset day 2" and join them with "All rows" on both inputs, this will be a full outer join.

What you then want to do is to filter out all the rows where the join condition is matched, adding a filter step. This will remove all the rows that didn't change between the 2 datasets, and you have the same problem as you are facing now: 6 columns. (You get the same as what you have now, but it is "smaller" to write, assuming the first join really did a full outer join.)

You need to select the not null column between the 2 datasets, to turn the 6 columns into 3. Add the "Transform column" step to use the IfNull() function, and you can do IfNull(dataset1.col1, dataset2.col1). Then you can add the select column step to get rid of all the columns you don't need anymore (to keep the final dataset clean).

And this will then turn your 6 columns back to 3 columns. You can also add a CASE WHEN column that tells you "row in dataset1 but not in dataset2" or "row in dataset2 but not in dataset1" to know where the difference is from.

I hope it does make sense, it's easier to do than to describe :D

1

Answers

-

It turns out that it was not that important to get them in the same dataset after all, as it will only indicate a possible error source.

My solution was neither elegant, nor perfect yet still good enough.

Thank you for your contribution to my thinking, Gianni!

0