Categories

- All Categories

- Oracle Analytics and AI Learning Hub

- 43 Oracle Analytics and AI Sharing Center

- 19 Oracle Analytics and AI Lounge

- 283 Oracle Analytics and AI News

- 60 Oracle Analytics and AI Videos

- 16.3K Oracle Analytics and AI Forums

- 6.4K Oracle Analytics and AI Labs

- Oracle Analytics and AI User Groups

- 108 Oracle Analytics and AI Trainings

- 20 Oracle Analytics and AI Challenge

- Find Partners

- For Partners

How to get unique rows from Dataflow out

I have a dataflow, and I have joined few tables and select column which I need final output.

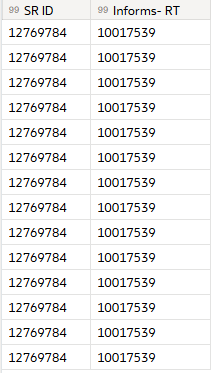

Due to joining process, it is creating multiple rows which are relevant before selecting columns. But after selecting columns, I prefer to have unique rows in output based on columns and rows not on previous join tables.

Any suggestions are appreciated.

Currently when we select columns output is as below image. I expect, output is single row after selecting the column.

Currently I am using bellow workaround.

Create another workflow with output of first dataset of dataflow.

Add twice same table and union with unique rows to display.

It serves the purpose but repeated work and difficult to manage multiple dataflows.

Best Answer

-

Hi,

"Annoyingly" the data flows steps don't use common database operation names, therefore if there is a "unique" step it has a weird name that I missed.

What you could do is to add a new column that you just set to a value of 1, then you use an "Aggregate" step where you move all your real columns to the "group by" part and only keep the new column you added with a value of 1 as aggregate (doesn't matter what aggregation you use).

The "group by" will make your rows unique.

Then you can remove the aggregated column or just ignore it in your usage of the resulting dataset (maybe one day in the future you will find a meaning of that count of rows that have been made unique, but if not, just get rid of it to avoid mistakes).

This will do the job…

Apparently in OAC I can't add an aggregate step with only "group by" and no aggregate, which is a valid and standard way to get unique rows, I would call that a missing feature more than a bug, because somebody didn't think about how standard database operations work when re-inventing them in data flows.

2

Answers

-

@Gianni Ceresa Thanks for quick clarification and understanding the core issue. I have filed a Idea for it.

Workaround you suggested may not work for larger datasets which we have typically.

I come across this issue when my Dataflow is failed. After investigation of dataflow failure, I found the reason of duplicate values. Anyway, I will play around it and let you know.

Actually, my workaround of additional Dataflow with union with unique rows seems simpler and does the job.

2 -

For up-voting, it is here:

Overall, if you have large datasets that makes dataflows to fail, it is maybe a sign you are pushing a bit too much. In my opinion datasets and dataflows are for small to medium size work. If large, nothing will ever work better than a database.

A database table with 1000+ columns and a billions of rows? Easy, not an issue, the database is built for that. A dataset is not built or designed for that.

The same for dataflows: a SQL query, a view, a simple PL/SQL based "ETL" will be faster and more powerful than dataflows. Because here again dataflows are lightweight ETL-task, but it's really done in a fairly poor way. Simple things like indexes, partitions etc. are what makes a database win every single time for that kind of job when size is involved.

I saw a number of times datasets and dataflows being abused to replace a data warehouse: that approach is very risky and would require an architect to have a look into it to validate the concept when increasing in size.

But every usage is different, with different constraints…

3